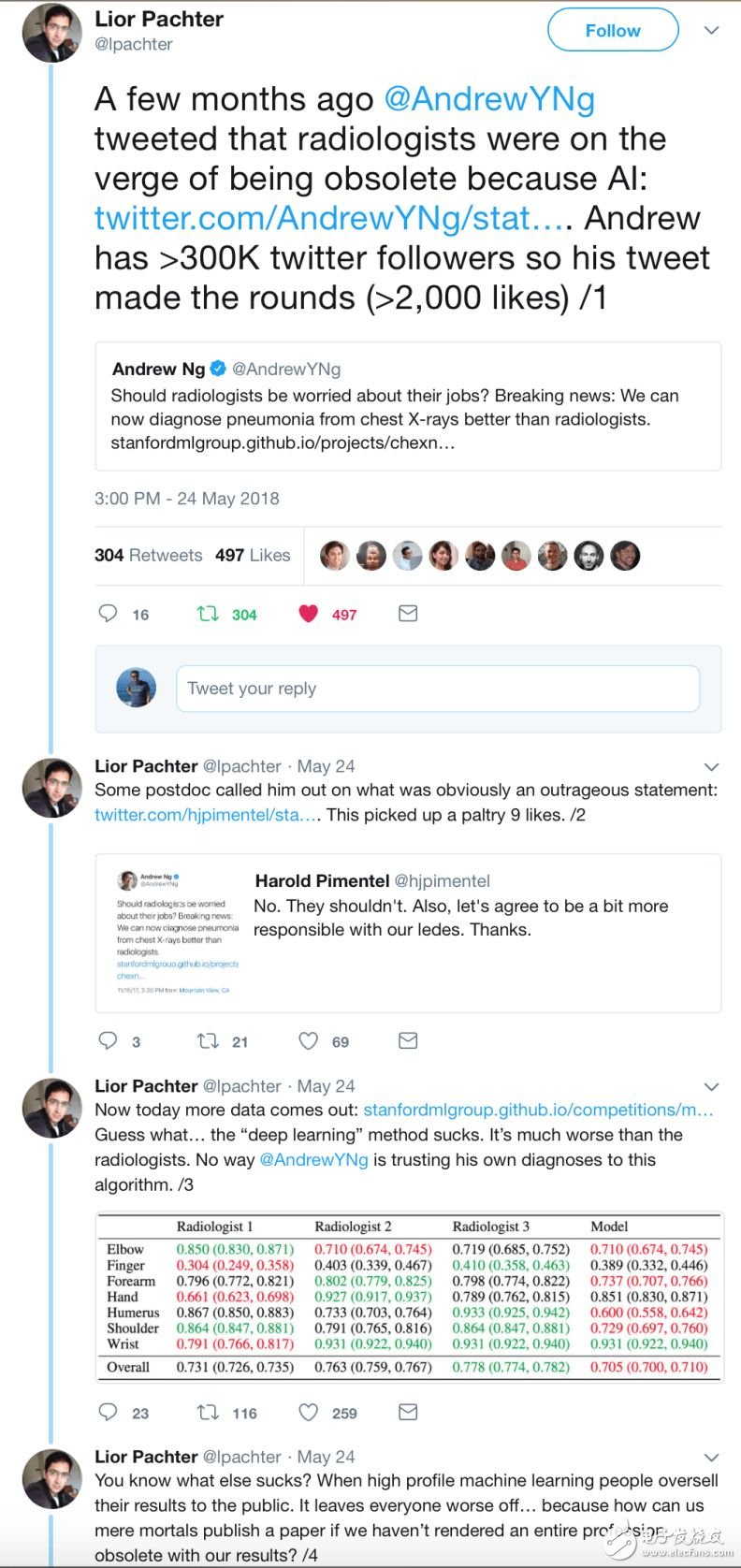

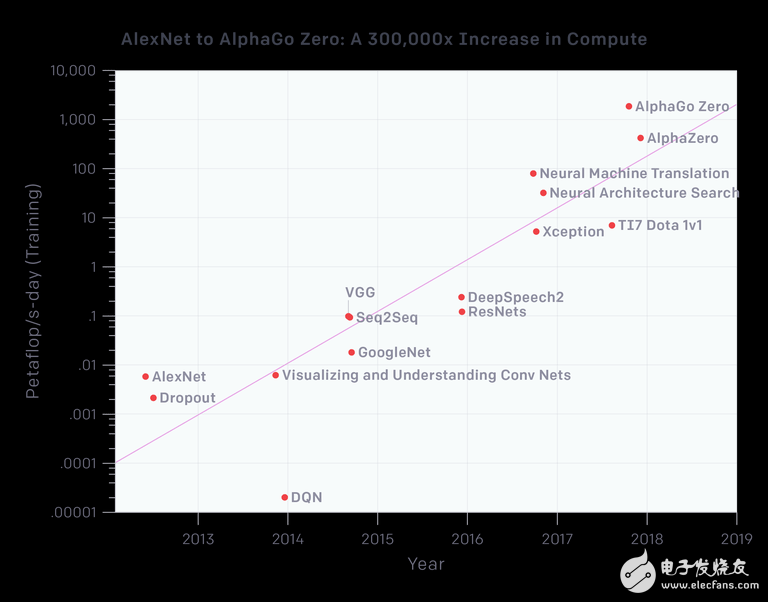

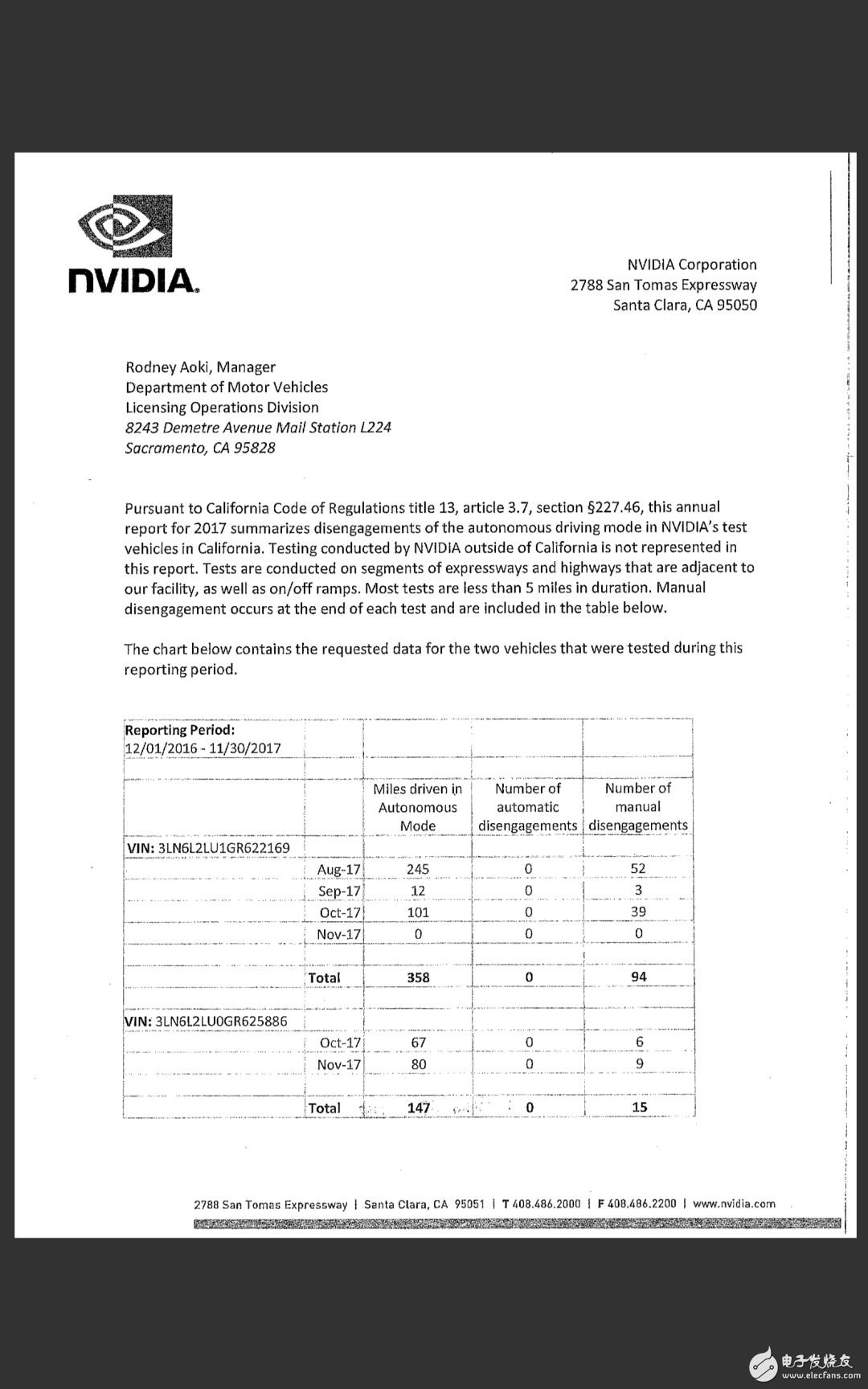

Deep learning has been at the forefront of the so-called AI revolution for several years now, and many people believed it was the magical "silver bullet" that would take us to the wonderful world of general AI. Many companies placed big bets in 2014, 2015 and 2016, when the field was still pushing new boundaries with results such as AlphaGo. Companies such as Tesla announced through the mouths of their CEOs that fully self-driving cars were just around the corner, to the point that Tesla began selling that vision to customers (to be enabled by a future software update).

We are now in the middle of 2018 and the situation has changed. This is not yet apparent on the surface: the NIPS conference is still hard to get into, corporate PR still promotes AI at press conferences, Elon Musk keeps promising self-driving cars, and Google's CEO keeps repeating Andrew Ng's slogan that AI is more revolutionary than electricity. But this narrative is beginning to crack. As I predicted in my previous article, the cracks are most visible in autonomous driving, an actual application of this technology in the real world.

The dust has settled on deep learning

When ImageNet was effectively solved (note: this does not mean that vision has been solved), prominent researchers in the field, including even the usually low-key Geoff Hinton, were actively giving press interviews and publicizing their work on social media; Yann LeCun, Andrew Ng and Fei-Fei Li are other examples. The general tone was that we were facing a huge revolution and that from now on things could only accelerate. Several years on, the Twitter feeds of these people have become less active.
Andrew Ng's tweet frequency is one example:

- 2013: 0.413 tweets per day
- 2014: 0.605 tweets per day
- 2015: 0.320 tweets per day
- 2016: 0.802 tweets per day
- 2017: 0.668 tweets per day
- 2018: 0.263 tweets per day (as of May 24)

Perhaps this is because Andrew Ng's bolder claims are now scrutinized much more severely by the community, as one tweet illustrated.

The sentiment has clearly cooled. There are far fewer tweets praising deep learning as the ultimate algorithm, and papers are becoming less "revolutionary" and more "evolutionary". DeepMind has not produced anything breathtaking since AlphaGo Zero. OpenAI has been rather quiet; its last burst of media attention was the agent playing Dota 2, which I think was meant to create the same kind of buzz as AlphaGo but fizzled out quickly. In fact, articles began to appear suggesting that even Google does not really know what to do with DeepMind, because its results are apparently not as practical as originally expected... As for the prominent researchers, they have mostly been meeting Canadian or French government officials to secure future funding. Yann LeCun even stepped down as director of the Facebook AI lab to become Facebook's chief AI scientist. This gradual shift from rich, big corporations toward government-funded research institutes suggests to me that the interest of these corporations (I mean Google and Facebook) in this kind of research is slowly winding down. Again, these are early signs, nothing spoken out loud, just body language.

Deep learning does not scale

One of the key slogans about deep learning is that it scales almost effortlessly. We had AlexNet with about 60 million parameters in 2012, so by now we probably have models with at least 1000 times as many parameters, right? Maybe we do, but the question is whether such a model is 1000 times as capable. Or even 100 times?
A study by OpenAI comes in handy here.

In terms of vision applications, we see that VGG and ResNets saturated at roughly one order of magnitude more compute than AlexNet (and actually with fewer parameters). Xception, a variant of Google's Inception architecture, only slightly outperforms Inception on ImageNet, and arguably only slightly outperforms everything else, because AlexNet had essentially already solved ImageNet. So at 100 times the compute of AlexNet, architectures have practically saturated in vision, or more precisely in image classification.

Neural machine translation is a major effort for all the big web search players, so it is no wonder that it takes all the compute it can get (and Google Translate is still poor, although it has improved). The latest three points in the figure show reinforcement learning projects applied to games by DeepMind and OpenAI. AlphaGo Zero and the slightly more general AlphaZero in particular consume an enormous amount of compute, but they are not applicable to real-world problems, because much of that compute goes into simulating and generating the data these data-hungry models need.

OK, so we can now train AlexNet in minutes rather than days, but can we train an AlexNet 1000 times bigger in days and get qualitatively better results? Apparently not... So in fact this graph, which was meant to show how well deep learning scales, shows just the opposite. We cannot simply scale up AlexNet and get correspondingly better results; we have to fiddle with specific architectures, and without an order of magnitude more data samples, the extra compute does not buy much. In practice, data in such quantities is only available in simulated game environments.
Self-driving accidents

By far the biggest blow to the reputation of deep learning comes from the domain of self-driving vehicles (something I anticipated for a long time, for example in this 2016 article: https://blog.piekniewski.info/2016/11/15/ai-and-the-ludic-fallacy/). At first it was thought that end-to-end deep learning could somehow solve this problem, a view that Nvidia promoted heavily. I don't think anyone in the world still believes this, though I could be wrong. According to last year's disengagement reports from the California DMV, the Nvidia car could not drive even 10 miles without a disengagement. I discussed the general state of this development in another article (https://blog.piekniewski.info/2018/02/09/av-safety-2018-update/) and compared it with the safety of human drivers (spoiler: it does not look good).

Since 2016 there have also been several accidents involving Tesla's Autopilot, some of them fatal. Tesla's Autopilot should not be confused with full self-driving, but at its core it relies on the same kind of technology. As of today, apart from occasional spectacular errors, it still cannot stop at an intersection, recognize a traffic light, or even navigate a roundabout. It is now May 2018, several months after the coast-to-coast autonomous drive that Tesla promised (it never happened, although rumor has it that Tesla tried and could not make it work). A few months ago (in February 2018), when asked about the coast-to-coast drive on a conference call, Elon Musk said:

"We could have done the coast-to-coast drive, but it would have required too much specialized code to effectively game it, and it would work for one particular route but would not be a general solution. So I think we could repeat it, but if it does not work on any other route, it is not a real solution."

"I am excited about the progress we are making on neural networks. It is one of those things where it does not seem like much progress, and then suddenly it is amazing."

Well, looking at the graph above (from OpenAI), I don't see that great progress. Nor is there any significant increase in miles per disengagement for nearly any big player in this field. In fact, the above statement can be read as: "We currently don't have the technology to safely drive people from coast to coast, though we could have pulled it off if we really wanted to... We deeply hope that some kind of rapid leap in neural network capabilities will happen soon and save us from disgrace and a lot of lawsuits."

But by far the biggest pin to puncture the AI bubble was the accident in which an Uber self-driving car struck a pedestrian in Arizona. The preliminary report of the National Transportation Safety Board (NTSB) contains some astonishing statements.

In addition to the general system design failure apparent in this report, it is striking that the system spent several seconds trying to decide what exactly it was seeing in front of it (whether a pedestrian, a bicycle, a vehicle or something else) rather than making the only logical decision in these circumstances, which was to make sure not to hit it. There are several reasons for this. First, people often verbalize their decisions after the fact. A person will typically say, "I saw a cyclist, so I veered left to avoid him." A large body of psychophysical literature suggests a quite different explanation: the person saw something that the fast perceptual loops of the nervous system quickly interpreted as an obstacle and acted swiftly to avoid it, and only many seconds later realized what had happened and provided a verbal explanation.

We make many decisions every day that are never put into words, and driving involves many of them. Putting things into words is costly and takes time.
Reality is often too pressing to allow for it. These mechanisms have evolved over a billion years to keep us safe, and driving (although modern) makes use of many such reflexes. Since these reflexes did not evolve specifically for driving, they can cause mistakes: a reflexive reaction to a wasp buzzing in the car has probably caused many crashes and deaths. But our general understanding of 3D space and speed, and our ability to predict the behavior of agents and of physical objects on the road, are primitive skills that were just as useful 100 million years ago as they are today, and they have been honed sharply by evolution.

But since most of these things are not easily put into words, they are hard to measure, and so we do not optimize our machine learning systems for them at all... Now this would seem to speak in favor of Nvidia's end-to-end approach (learn an image -> action mapping, skipping any verbalization), and in some ways that is the right way to do it. The problem is that the input space is extremely high-dimensional while the action space is very low-dimensional. Hence the "amount" of "label" (the readout) is extremely small compared to the amount of information coming in. In such a situation it is very easy to learn spurious relations, as adversarial examples in deep learning illustrate. A different paradigm is needed. I postulate that predicting the entire perceptual input along with the action is a first step toward making a system able to extract the semantics of the world rather than spurious correlations. For more information, see my first proposed architecture, the Predictive Vision Model (https://blog.piekniewski.info/2016/11/04/predictive-vision-in-a-nutshell/).

In fact, if there is one thing we have learned from the outburst of deep learning, it is that the (10k+ dimensional) image space contains enough spurious patterns that they actually generalize across many images and create the impression that our classifiers understand what they are seeing. Nothing could be further from the truth, as even top researchers who have been heavily invested in this field for years admit.

Gary Marcus says no to the hype

I should mention that more high-profile people have recognized this hubris and have had the courage to call it out openly. One of the most active in this respect is Gary Marcus. Although I don't agree with everything Gary proposes regarding AI, we certainly agree that it is not nearly as powerful as the deep learning hype machine paints it. In fact, it is not even close. He has written excellent blog posts and papers:

- Deep Learning: A Critical Appraisal (https://arxiv.org/abs/1801.00631)
- In defense of skepticism about deep learning (https://medium.com/@GaryMarcus/in-defense-of-skepticism-about-deep-learning-6e8bfd5ae0f1)

He deconstructs the deep learning hype in great depth and detail. I respect Gary very much; he behaves like a real scientist should, while most of the so-called "deep learning stars" behave like third-rate celebrities.

Conclusion

Predicting the AI winter is like predicting a stock market crash: it is impossible to tell precisely when it will happen, but almost certain that it will happen at some point. Much like before a stock market crash, there are signs of the impending collapse, but the narrative is so appealing that it is very easy to ignore them, even when they are in plain sight. In my opinion, there are already such signs of a huge decline in deep learning (and probably in AI in general, since the term has been abused endlessly by corporate propaganda), visible in plain sight yet hidden from most people by an increasingly seductive narrative. How "deep" will that winter be? I don't know. What will come next? I don't know either. But I am fairly sure that the AI winter is coming, perhaps sooner rather than later.
