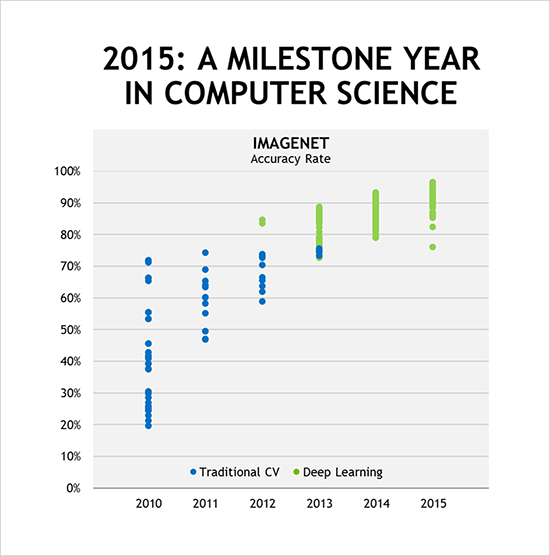

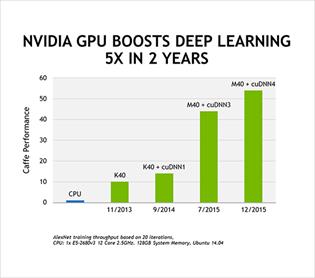

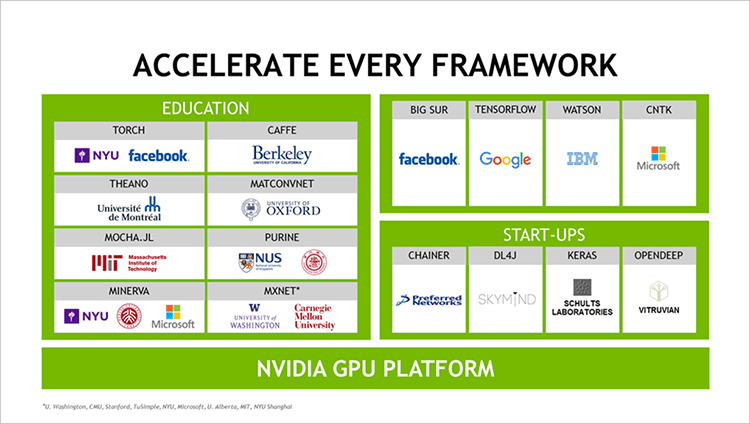

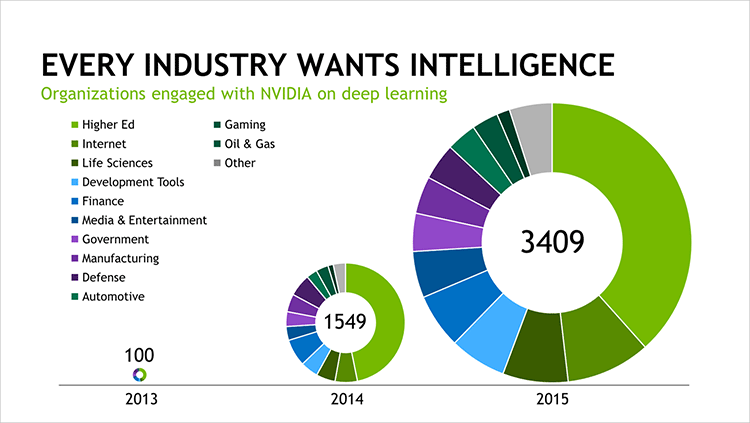

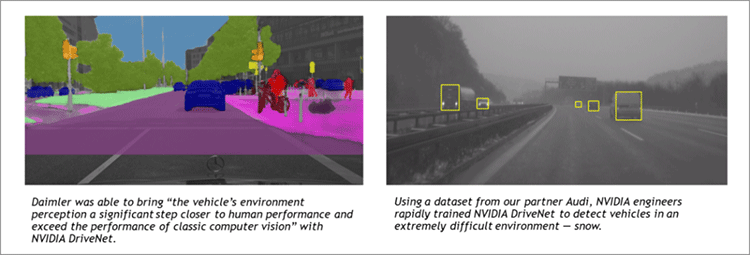

IT168 News: New York University held a symposium this week to discuss the future of artificial intelligence. Yann LeCun invited NVIDIA co-founder and CEO Jen-Hsun Huang to speak. The event brought together many leaders in the field to explore the current state of artificial intelligence and its continued progress. The following is the content of Mr. Huang's speech: why deep learning is a new software model that requires a new computing model; why AI researchers have adopted GPU-accelerated computing; how NVIDIA is continuing to advance AI as adoption accelerates; and why AI has finally taken off after so many years of development.

The Big Bang

For as long as we have been designing computers, artificial intelligence has been the final frontier. For the past 50 years, building intelligent machines that can perceive the world as humans do, understand human language, and learn from examples has been the life's work of computer scientists. Yet it took the combination of Yann LeCun's convolutional neural network, Geoff Hinton's back-propagation and stochastic gradient descent approach to training, and Andrew Ng's large-scale use of GPUs to accelerate deep neural networks (DNNs) to ignite the big bang of modern AI: deep learning.

At the time, NVIDIA was busy advancing GPU-accelerated computing, a new computing model that uses massively parallel graphics processors to accelerate applications that are themselves parallel in nature. Scientists and researchers turned to GPUs to run molecular-scale simulations that determine the effectiveness of life-saving drugs, to visualize our organs in 3D (reconstructed from low-dose CT scans), and to run galactic-scale simulations to discover the laws that govern our universe. One researcher who used our GPUs for quantum chromodynamics simulations told me: "Because of NVIDIA's work, I can now do my life's work in my lifetime." This is wonderfully rewarding. Giving people the power to create a better future has always been our mission. NVIDIA GPUs have democratized supercomputing, and researchers have now discovered that power.

By 2011, AI researchers had discovered NVIDIA GPUs. The Google Brain project had just achieved amazing results: it learned to recognize cats and people by watching videos on YouTube. But it required 2,000 server CPUs, powered and cooled in one of Google's large data centers. Few people have computers of this scale. Enter NVIDIA and the GPU. Bryan Catanzaro of NVIDIA Research teamed up with Andrew Ng's group at Stanford University to apply GPUs to deep learning. As it turned out, 12 NVIDIA GPUs could deliver the deep learning performance of 2,000 CPUs. Researchers at New York University, the University of Toronto, and the Swiss AI Lab accelerated their DNNs on GPUs. Then the fireworks started.

Deep Learning Performs Miracles

Alex Krizhevsky of the University of Toronto won the 2012 ImageNet computer image recognition competition. (1) Krizhevsky beat, by a huge margin, handcrafted software written by computer vision experts. Krizhevsky and his team wrote no computer vision code. Instead, using deep learning, their computer learned to recognize images by itself. They designed a neural network called AlexNet and trained it with 1 million example images, a process that required trillions of mathematical operations on NVIDIA GPUs. Krizhevsky's AlexNet had beaten the best human-written software.

The AI race was on.
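
To make "learning from examples instead of writing rules" concrete, here is a minimal sketch in Python. It is not AlexNet and not anything from the speech: it trains a single logistic neuron with stochastic gradient descent on a toy task. The task (label a point 1 when x + y > 1), the function names, and all hyperparameters are assumptions chosen purely for illustration. The point is that the rule itself is never written into the classifier; it is recovered from labeled examples.

```python
import math
import random


def make_examples(count, seed):
    """Generate toy labeled data: points in the unit square, label 1 if x + y > 1."""
    rng = random.Random(seed)
    examples = []
    for _ in range(count):
        x, y = rng.random(), rng.random()
        examples.append(((x, y), 1 if x + y > 1.0 else 0))
    return examples


def train_classifier(examples, epochs=50, learning_rate=0.5, seed=0):
    """Fit one logistic neuron to (features, label) pairs with SGD."""
    rng = random.Random(seed)
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    data = list(examples)
    for _ in range(epochs):
        rng.shuffle(data)  # visit the examples in a fresh random order
        for features, label in data:
            z = bias + sum(w * x for w, x in zip(weights, features))
            prediction = 1.0 / (1.0 + math.exp(-z))
            error = prediction - label  # gradient of the log loss w.r.t. z
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Return 1 when the neuron's weighted sum is positive, else 0."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if z > 0 else 0


train_set = make_examples(500, seed=1)
test_set = make_examples(200, seed=2)
weights, bias = train_classifier(train_set)
accuracy = sum(predict(weights, bias, f) == label
               for f, label in test_set) / len(test_set)
print(f"held-out accuracy: {accuracy:.2f}")
```

A real image network differs in scale rather than in kind: millions of weights instead of three, and the per-example arithmetic in the inner loop is the part that a GPU runs in parallel.
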
By 2015, another major milestone was reached. Using deep learning, Google and Microsoft both beat the best human score in the ImageNet challenge. (2,3) Not the best human-written program, but the best human. Shortly thereafter, Microsoft and the University of Science and Technology of China announced a deep neural network that achieved IQ test scores at the college postgraduate level. (4) Baidu then announced that a deep learning system called Deep Speech 2 had learned both English and Mandarin with a single algorithm. (5) All of the top results in the 2015 ImageNet competition were based on deep learning, all ran on GPU-accelerated deep neural networks, and many surpassed human-level accuracy.

In 2012, deep learning beat human-written software. By 2015, deep learning had achieved "superhuman" levels of perception.

A New Computing Platform for a New Software Model

Computer programs contain commands that are, for the most part, executed sequentially. Deep learning is a fundamentally new software model in which billions of software neurons and trillions of connections are trained in parallel. By running DNN algorithms and learning from examples, the computer is essentially writing its own software. This radically different software model needs a new computing platform to run efficiently. Accelerated computing is an ideal approach, and the GPU is the ideal processor. As Nature recently noted, early progress in deep learning was made possible by the advent of "high-speed graphics processors (GPUs) that are easy to program and allow researchers to increase the speed of their training network by 10 or 20 times." (6) Performance, programming productivity, and open accessibility are the key factors in building a new computing platform.

Performance. NVIDIA GPUs are naturally good at parallel workloads and speed up DNNs by 10 to 20 times, reducing each of the many training iterations from weeks to days, and we did not stop there.
By collaborating with AI developers, we have continued to improve our GPU designs, system architecture, compilers, and algorithms, and in just three years we have sped up the training of deep neural networks by 50 times, a much faster pace than Moore's Law. We expect training speed to increase by another 10 times in the next few years.

Programmability. AI innovation is moving at a breakneck pace. Ease of programming and developer productivity are paramount. The programmability and richness of the NVIDIA CUDA platform let researchers innovate quickly, building new configurations of CNNs, DNNs, deep inception networks, RNNs, LSTMs, and reinforcement learning networks.

Accessibility. Developers want to create anywhere and deploy everywhere. NVIDIA GPUs are available all over the world from every computer OEM; in desktops, laptops, servers, and supercomputers; and in the cloud from companies such as Amazon, IBM, and Microsoft. All major AI development frameworks, from internet companies to research institutions to startups, support NVIDIA GPU acceleration. Whichever AI development system users prefer, it will run faster with GPU acceleration. We have also created GPUs for just about every computing form factor so that DNNs can power intelligent machines of all kinds. GeForce is for PCs, Tesla is for the cloud and supercomputers, Jetson is for robots and drones, and DRIVE PX is for cars. All of them share the same architecture and accelerate deep learning.

Every Industry Wants Intelligence

Baidu, Google, Facebook, and Microsoft were the first companies to adopt NVIDIA GPUs for deep learning. This AI technology is how they respond to your spoken words, translate speech or text into another language, recognize and automatically tag images, and recommend news, entertainment, and products tailored to what each of us likes and cares about. Startups and established companies are now racing to use AI to create new products and services or to improve their operations. In just two years, the number of companies collaborating with NVIDIA on deep learning has grown nearly 35-fold to more than 3,400. Industries such as healthcare, life sciences, energy, financial services, automotive, manufacturing, and entertainment will all benefit from extracting insight from massive amounts of data. And as Facebook, Google, and Microsoft open their deep learning platforms for everyone to use, AI-powered applications will spread quickly. In light of this trend, Wired magazine recently heralded the "rise of the GPU."

Self-driving cars. Whether by augmenting humans with a superhuman co-pilot, revolutionizing personal mobility services, or reducing the need for sprawling parking lots in cities, self-driving cars have the potential to deliver enormous social benefits. Driving is complicated. Unexpected things happen. Freezing rain turns the road into a skating rink. The road to your destination is closed. A child runs out in front of the car. You cannot write software that anticipates every situation a self-driving car might encounter. That is the value of deep learning: it can learn, adapt, and improve. We are building an end-to-end deep learning platform for self-driving cars called NVIDIA DRIVE PX, from the training system to the in-car AI computer. The results are very exciting. A future with superhuman computer co-pilots and driverless shuttles is no longer science fiction.

Robots. FANUC, a leading manufacturer of industrial robots, recently demonstrated an assembly-line robot that learned to "pick" randomly oriented objects out of a bin. The GPU-powered robot learned by trial and error.
This deep learning technology was developed by Preferred Networks, which was recently featured in a Wall Street Journal article headlined "Japan Seeks Tech Revival with Artificial Intelligence."

Healthcare and life sciences. Deep Genomics is applying GPU-based deep learning to understand how genetic variation leads to disease. Arterys uses GPU-powered deep learning to speed up the analysis of medical images. Its technology will be deployed in GE Healthcare MRI machines to help diagnose heart disease. Enlitic is using deep learning to analyze medical images and identify tumors, nearly invisible fractures, and other conditions.

These are just a handful of examples. There are literally thousands.

Accelerating Artificial Intelligence with GPUs: A New Computing Model

Breakthroughs in deep learning have sparked the AI revolution. Machines powered by deep neural networks can solve problems too complex for human programmers. They learn from data and improve with use. Even non-programmers can train the same DNN to solve new problems. Progress is rapid, and adoption keeps growing. We firmly believe the impact on society will grow just as rapidly. A recent KPMG study predicts that computerized driver assistance technology will help reduce car accidents by 80% in 20 years, equivalent to saving nearly 1 million lives each year. Deep learning AI will be the cornerstone technology in this field.

The impact on the computer industry will grow just as rapidly. Deep learning is a fundamentally new software model, so we need a new computing platform to run it: an architecture that can efficiently execute programmer-coded commands as well as the massively parallel training of deep neural networks. We firmly believe that GPU-accelerated computing is the way to achieve this.
Popular Science magazine recently called the GPU "the workhorse of modern A.I." We agree.