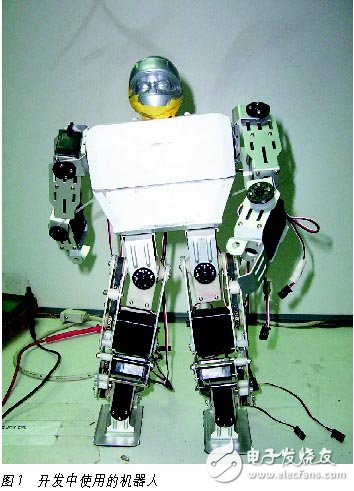

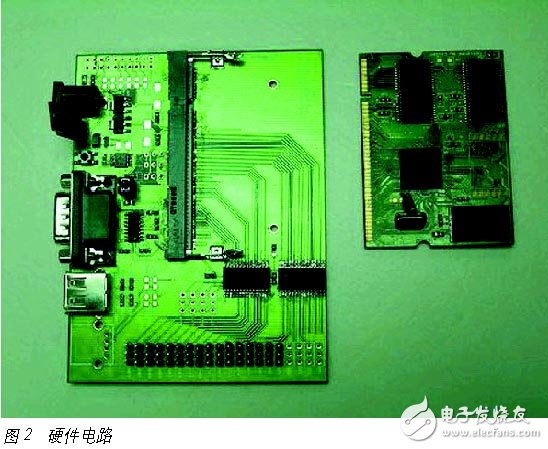

As Internet technology, information appliances, and industrial control technology become ever more closely intertwined, connecting embedded devices to the Internet has become a general trend. New microprocessors keep appearing, so embedded operating systems must be more portable and support a wider range of processors, and embedded development increasingly depends on powerful hardware development tools and software support packages. Technologies and concepts from general-purpose computing, such as embedded databases, mobile agents, and real-time CORBA, have gradually been ported to embedded systems. Embedded Linux systems are also developing rapidly through the joint efforts of millions of enthusiasts around the world. Open source code, a small kernel, high execution efficiency, strong networking, and support for friendly multimedia user interfaces make Linux well suited to embedded applications such as information appliances.

Early embedded system design usually followed a "hardware first" principle: the software requirements were only roughly estimated, the hardware was designed and built first, and the software was then developed on that fixed hardware platform. This makes it difficult to exploit software and hardware resources fully and reach the best performance. Moreover, any change to the design forces the whole process to be repeated, which greatly increases cost and lengthens the design cycle. Because this traditional method can only optimize the software or the hardware separately, it cannot achieve good overall optimization of the system within a limited design space, and its results depend heavily on the designer's experience and on trial and error.
Since the 1990s, as electronic systems have become more powerful and smaller, hardware and software are no longer two separate concepts but are tightly integrated and interact with each other. This gave rise to hardware/software codesign: describing, synthesizing, and verifying hardware and software with unified methods and tools. Guided by the system's goals and requirements, the designer analyzes the hardware and software functions together with the available resources and designs the two architectures jointly. This makes the most of both and avoids the drawbacks of designing them independently, yielding an optimized design with high performance and low cost.

Driven by progress in networking, communications, and microelectronics, and by the strong demand for digital information products, embedded technology has broad room for development and innovation. (1) The requirements of low power consumption, high performance, and high reliability are an opportunity for China's chip design industry; domestic CPUs, systems on chip (SoC), and networks on chip (NoC) built around embedded processors are set to develop rapidly. (2) Linux is gradually becoming the mainstream embedded operating system, and J2ME technology will also have a profound impact on embedded software development. Free software is currently very popular and has given a strong impetus to the development of software technology.
The embedded operating system kernel must not only be small and highly real-time but will also evolve toward high dependability, adaptability, and componentization. Supporting development environments will become more integrated, automated, and user-friendly, and software support for wireless communication and power management will become increasingly important. In recent years, operating systems designed to let embedded devices support Web services more effectively have appeared one after another. Their architecture uses component-oriented and middleware technology to support dynamic loading of application software and even hardware, so-called "plug and play", a clear advantage over earlier embedded operating systems. (3) The Java virtual machine and embedded Java will become powerful tools for developing embedded systems. Multimedia on embedded systems will become a reality; their use in networked environments is an irresistible trend, and they will come to dominate network access equipment. (4) Combining embedded systems with artificial intelligence and pattern recognition will produce a variety of more user-friendly and intelligent practical systems, with smart phones, digital TV, and automotive electronics as the entry points for this opportunity. With the development of network technology and grid computing, "ubiquitous computing" centered on embedded mobile devices will become a reality.

Robotics is already widely used in both industrial control and commercial fields, and embedded systems are indispensable in everything from traditional industrial robots for production and processing to modern entertainment robots.
Most existing robots use 8-bit or 16-bit single-chip microcontrollers as the control unit. These are slow, run no operating system, and cannot support rich multitasking, so the potential of the system is not fully exploited.

The ARM9-based robot vision system described here has three goals. First, port and configure Linux on the chosen S3C2410 platform, build a file system suited to the platform and the application, and establish a stable hardware and software development environment for the robot vision system. Second, write an application that captures images from a USB camera in real time on the S3C2410 platform and uses the processor's computing power to post-process them, recognizing and locating the target as input to the robot's motion unit. Finally, write a device driver for the joint motors so that the operating system can control the robot's actions precisely in response to the vision results, completing a full "robot vision system".

1. Selection and construction of the hardware platform

The robot system is shown in Figure 1. Its body contains 24 servos (steering gears) driving 24 joints, and the robot's motions are produced by controlling these servos.

(1) Vision system

A USB camera is used as the image acquisition device. Its advantages are a universal interface, wide driver availability, and a fast transfer rate; Linux also supports USB devices well, which makes application programs easier to write and debug. The WebEye v2000 camera uses the OV511 chip, for which the Linux source tree already contains a driver, so it is well suited to this development.
A high-end 32-bit embedded microprocessor is used: Samsung's ARM-based S3C2410, running at 200 MHz. It integrates a wealth of on-chip peripherals: separate 16 KB instruction and 16 KB data caches, an MMU for virtual memory management, an LCD controller, support for booting from NAND Flash, a system manager, 3 UART channels, 4 DMA channels, 4 PWM timer channels, I/O ports, an RTC, an 8-channel 10-bit ADC with touch screen interface, an IIC bus interface, USB host and USB device ports, an SD/MMC card host interface, 2 SPI channels, and an on-chip PLL clock multiplier. The S3C2410 uses the ARM920T core and is built with standard macro cells and memory cells in a 0.18 μm CMOS process.

(2) Hardware platform composition

The hardware platform is shown in Figure 2. A core board carries the CPU, 16 MB of NOR Flash, 64 MB of NAND Flash, and 32 MB of SDRAM, and the system is set to boot from NAND Flash. A peripheral board connects the system to external devices and provides 2 USB ports, 1 UART port, 24 three-pin sockets (for the robot's joint servos), a power connector, and so on. The core board and the peripheral board are connected through a memory slot. Separating the two has clear advantages: upgrading the core board increases the system's processing capacity, and swapping the peripheral board adapts the system to different applications. This greatly reduces hardware cost and makes development and debugging much easier. In addition, the core board on its own is a minimal system, and in embedded design, ensuring that the minimal system is reliable is the first step of development. The core board and peripheral circuits are debugged until they work normally, and the development board is verified to communicate with the PC over a serial cable using the HyperTerminal tool under Windows.

2. Software platform construction and configuration

More and more embedded systems use Linux as their operating system. Linux is powerful, runs stably, has complete drivers, is flexibly configurable, and has a compact kernel, and it has long been closely tied to embedded systems. Of the many kernel versions, the 2.4 series is relatively mature, widely used on embedded platforms, and well documented; version Linux-2.4.18-rmk7-pxa1 is used here.

a. Configure the development board software environment

The system boot program (commonly called the bootloader, equivalent to the BIOS on a PC) is burned into the S3C2410 core board. The vivi bootloader recommended by Samsung is used here, and the Flash partitions are defined by setting vivi's parameters.

b. Configure, compile, and download the kernel

(1) Download the kernel source and set up a cross-compilation environment on the PC; armv4l-unknown-linux-gcc compiles the Linux kernel into binary code for the ARM architecture.
(2) Configure the kernel: use the make menuconfig command to compile statically into the kernel the USB support, the USB camera driver (for the OV511 chip), the NAND Flash driver, and the drivers needed to mount the embedded file system.
(3) Compile the kernel: use the cross-compilation tools to build the source into an executable binary kernel image, zImage.
(4) Download the kernel: use vivi's data programming function over the serial line to write zImage to the kernel partition of the Flash.

c. Make a file system

Common file systems in embedded systems include CRAMFS, JFFS, JFFS2, and YAFFS. Given the actual requirements, CRAMFS is used here.
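The Flash layout set in vivi has to leave the kernel and root partitions enough room for zImage and the CRAMFS image. The C sketch below checks a partition table for erase-block alignment, ordering, and overlap, and checks whether an image of a given size fits a named partition. Only the names "kernel" and "root" come from the text; the other partition names, all offsets and sizes, and the 16 KiB erase-block size are hypothetical values for illustration, not the layout used in the original system.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical NAND geometry: 64 MB chip with 16 KiB erase blocks (assumed). */
#define NAND_SIZE   (64u * 1024u * 1024u)
#define ERASE_BLOCK (16u * 1024u)

struct flash_part {
    const char *name;
    uint32_t offset; /* bytes from the start of NAND */
    uint32_t size;   /* bytes */
};

/* Hypothetical layout for illustration only; the real one is set in vivi. */
static const struct flash_part layout[] = {
    { "vivi",   0x00000000u, 0x00020000u }, /* bootloader, 128 KiB */
    { "param",  0x00020000u, 0x00010000u }, /* bootloader parameters, 64 KiB */
    { "kernel", 0x00030000u, 0x001D0000u }, /* zImage */
    { "root",   0x00200000u, 0x00400000u }, /* CRAMFS root image, 4 MiB */
    { "usr",    0x00600000u, 0x03A00000u }, /* rest of the chip */
};
#define NPARTS (sizeof layout / sizeof layout[0])

/* Returns 1 if every partition is erase-block aligned, non-empty, in ascending
   order without overlap, and inside the chip; otherwise 0. */
static int layout_is_valid(const struct flash_part *p, size_t n) {
    uint32_t prev_end = 0;
    for (size_t i = 0; i < n; i++) {
        if (p[i].size == 0 || p[i].offset % ERASE_BLOCK || p[i].size % ERASE_BLOCK)
            return 0;
        if (p[i].offset < prev_end)                        /* overlaps the previous one */
            return 0;
        if ((uint64_t)p[i].offset + p[i].size > NAND_SIZE) /* runs past the chip */
            return 0;
        prev_end = p[i].offset + p[i].size;
    }
    return 1;
}

/* Looks up a partition by name; returns NULL if there is none. */
static const struct flash_part *find_part(const char *name) {
    assert(name != NULL);
    for (size_t i = 0; i < NPARTS; i++)
        if (strcmp(layout[i].name, name) == 0)
            return &layout[i];
    return NULL;
}

/* Returns 1 if an image of image_bytes fits in the named partition. */
static int image_fits(const char *name, uint32_t image_bytes) {
    const struct flash_part *p = find_part(name);
    return p != NULL && image_bytes <= p->size;
}
```

For example, the size of the mkcramfs output can be passed to image_fits("root", ...) before the image is programmed. Since the text only states that the final image is under 3 MB, the 4 MiB root partition here is an assumption chosen to leave some headroom.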
In the kernel configuration, CRAMFS support is compiled in statically. The mkcramfs tool is used to build a carefully trimmed file system image, which is then written to the root partition of the Flash with vivi's programming command. The final image is under 3 MB, a size dictated by the tight storage resources of the embedded system. When the system starts, the boot messages appear in HyperTerminal on the PC, including the kernel version, the Flash partition table, the cross-compiler version, and the components compiled statically into the kernel.

3. Driver writing and application development

To realize the vision function, a driver for the robot's joint motors must first be written so that the operating system can control the robot's actions in response to the vision results. Vision is not an end in itself but a way for the robot to obtain information; its fundamental purpose is to provide the strategy or data that drive the robot's actions and behavior, and vision that does not lead to action serves no purpose.

The robot's joints are all driven by servos. A servo is simple in structure and easy to control, with only 3 external pins: power, ground, and the PWM signal. Controlling a servo amounts to generating a PWM wave with the appropriate frequency and pulse width. The S3C2410 integrates 4 PWM units; the driver uses one of them as the control signal source for the robot's head motor and sets the frequency and duty cycle by writing the timer registers, producing the desired PWM wave. The driver is cross-compiled as a module and loaded into the kernel dynamically after the system has started. Because the module stays independent of the kernel until it is loaded, the driver is easy to debug. A short test program confirms that the joint motor works normally.
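The driver only has to turn a head angle into PWM settings, while the vision program described next must derive that angle from a camera frame. The C sketch below strings these steps together: binarization with a fixed threshold, the mean column of the target pixels, a linear mapping from the target's horizontal offset to a new head angle, and conversion of that angle into the timer count (TCNTB) and compare (TCMPB) values, using the S3C2410 timer clock relation PCLK / (prescaler + 1) / divider. The 50 MHz PCLK, the 50 Hz servo frame, the 0.5 to 2.5 ms pulse range, the 50° field of view, and all function names are assumptions. Spatial-domain enhancement is omitted, and a real kernel driver would write these values into the memory-mapped timer registers rather than return them.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* All constants below are assumptions for illustration. */
#define PCLK_HZ      50000000u /* peripheral clock feeding the PWM timers */
#define PERIOD_US    20000u    /* 50 Hz servo frame */
#define PULSE_MIN_US 500u      /* pulse width for 0 degrees */
#define PULSE_MAX_US 2500u     /* pulse width for 180 degrees */
#define HFOV_DEG     50        /* camera horizontal field of view */

/* Binarization: pixels brighter than threshold become 255 (target), the rest 0. */
static void binarize(uint8_t *img, size_t n, uint8_t threshold) {
    for (size_t i = 0; i < n; i++)
        img[i] = (img[i] > threshold) ? 255 : 0;
}

/* Mean column of the target pixels, or -1 if no target pixel is present. */
static int target_column(const uint8_t *bin, int width, int height) {
    assert(width > 0 && height > 0);
    uint64_t sum_x = 0, count = 0;
    for (int y = 0; y < height; y++)
        for (int x = 0; x < width; x++)
            if (bin[(size_t)y * (size_t)width + (size_t)x]) {
                sum_x += (uint64_t)x;
                count++;
            }
    return count ? (int)(sum_x / count) : -1;
}

/* Turn the head by the target's offset from the image centre, mapped linearly
   onto the field of view and clamped to 0..180 degrees. The sign convention
   depends on how camera and servo are mounted (assumed here). */
static int next_head_angle(int current_deg, int col, int width) {
    assert(width > 0);
    int a = current_deg + (col - width / 2) * HFOV_DEG / width;
    return a < 0 ? 0 : (a > 180 ? 180 : a);
}

struct pwm_regs {
    uint32_t tcntb; /* ticks per PWM period (count buffer) */
    uint32_t tcmpb; /* ticks of the high pulse (compare buffer), assuming the
                       output inverter is off; check polarity in the datasheet */
};

/* Angle -> timer values. Timer clock = PCLK / (prescaler + 1) / divider.
   Returns 0 on success, -1 if the angle is out of range or the period
   does not fit the 16-bit counter. */
static int angle_to_pwm(int angle_deg, uint32_t prescaler, uint32_t divider,
                        struct pwm_regs *out) {
    assert(out != NULL && prescaler <= 255u);
    assert(divider == 2u || divider == 4u || divider == 8u || divider == 16u);
    if (angle_deg < 0 || angle_deg > 180)
        return -1;
    uint64_t tick_hz = PCLK_HZ / (prescaler + 1u) / divider;
    uint64_t period = tick_hz * PERIOD_US / 1000000u;
    uint64_t pulse_us = PULSE_MIN_US +
        (uint64_t)angle_deg * (PULSE_MAX_US - PULSE_MIN_US) / 180u;
    if (period == 0 || period > 65535u)
        return -1;
    out->tcntb = (uint32_t)period;
    out->tcmpb = (uint32_t)(tick_hz * pulse_us / 1000000u);
    return 0;
}
```

For example, with prescaler 49 and divider 2 the timer runs at 500 kHz, so a 20 ms frame needs 10000 ticks and a 90° (1.5 ms) pulse needs 750, well within the 16-bit counter. A fixed threshold and a column centroid are the simplest choices; they assume the ball is the only bright region in the frame.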
A practical application scenario for the robot vision system: a target ball moves in front of the background, and the robot is expected to recognize and locate it and then turn its head to follow the target's movement, as if it were watching the moving target. The main functions of the robot vision processing program are:
(1) Read video data from the USB camera in real time and perform simple preprocessing.
(2) Process the image, mainly enhancing it in the spatial domain, and then extract the target ball from the background by binarizing the image.
(3) Compute the target's position and from it the rotation angle of the robot's head; then, through the servo driver, turn the head to that angle so that it tracks the target.
In experiments, the robot head tracked the target well, and the vision prototype system was realized.

4. Expansion work

The robot vision system is only one application of embedded systems in robotics. Many other subsystems are worth implementing, such as a voice system (speech recognition and speech output), walking control (algorithms for smooth walking), and networking (future robots will no longer work as isolated individuals; multi-robot collaboration is an inevitable trend, and connecting robots to other devices is increasingly urgent). It must be admitted that although current embedded processors are already fairly powerful, power consumption, size, and cost limit their speed, which still falls short of the demands of real-time video and audio processing, multimedia collaborative computing, and similar tasks.
Therefore, a more powerful embedded processor will also be an important consideration when choosing a control unit for future robots. The robot vision system described in this article shows the capabilities and broad application range of embedded systems. In today's post-PC era, with digital information and network technology developing rapidly, embedded systems are widely used in mobile computing platforms (PDAs, handheld computers), information appliances (digital TVs, set-top boxes, network equipment), wireless communication equipment (smart phones, stock quote receivers), industrial and commercial control (intelligent industrial control equipment, POS/ATM machines), e-commerce platforms, and even military applications, and their prospects are very promising.