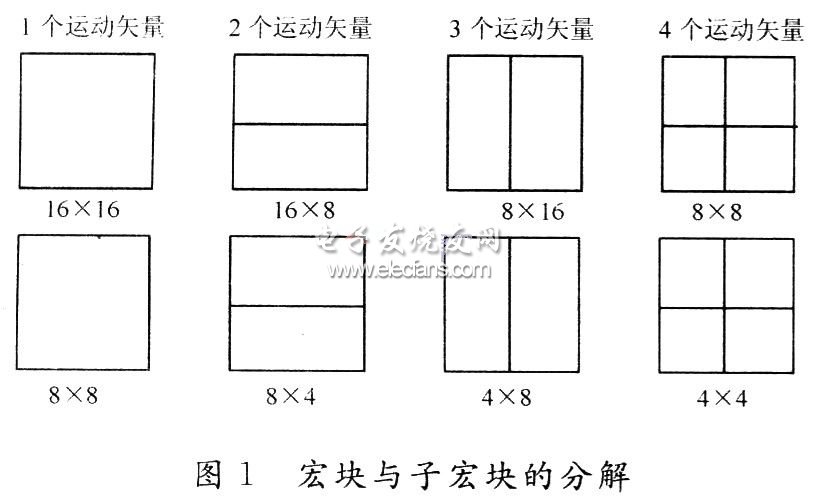

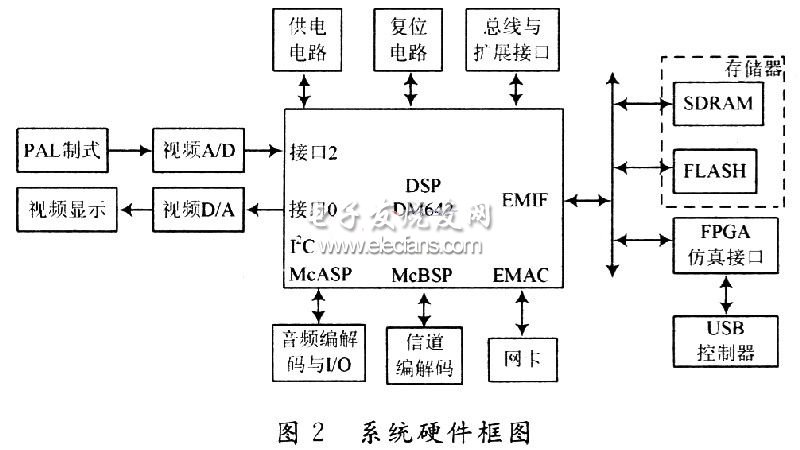

Introduction

The Internet-based digital video industry has promising prospects, and the large-scale deployment of 3G networks will help make mobile video communication a reality. The digital video industry comprises the creative and communication industries built on digital video content, together with the multidisciplinary high-technology support and service industries they depend on. However, digitized video carries a huge amount of data, which makes storage and transmission very difficult, and digital video compression coding is the key technology for solving this problem. The standards expert group designed compression coding that exploits the spatial correlation between adjacent pixels and adjacent lines within a frame and the temporal correlation between moving images in adjacent frames, and that discards redundant components and the information that matters least to human vision and hearing. This reduces the amount of data to be stored, transmitted, and processed, improves the utilization of spectrum resources, and makes digital video practical. With its good network adaptability, high compression efficiency, and flexible syntax, H.264 fits current directions in video processing and a wider range of application environments better than earlier video coding standards, and it further improves both compression efficiency and playback quality. At the same subjective quality as perceived by the naked eye, H.264 improves coding efficiency by about 50% compared with H.263.
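To make "a huge amount of data" concrete, the short calculation below computes the uncompressed bit rate of a digital video signal. It is a sketch under stated assumptions: 8-bit samples, 4:2:0 chroma subsampling, and a 720 × 576 PAL frame at 25 frames/s (176 × 144 is the QCIF size used in the test results later on). The helper name `raw_bitrate` is hypothetical.

```python
# Chroma samples per 4 luma samples for common subsampling schemes.
CHROMA_PER_4_LUMA = {"4:2:0": 2, "4:2:2": 4, "4:4:4": 8}


def raw_bitrate(width, height, fps, bits_per_sample=8, chroma="4:2:0"):
    """Uncompressed video bit rate in bits per second (hypothetical helper).

    Assumes every luma and chroma sample is stored with `bits_per_sample` bits.
    """
    luma = width * height
    samples_per_frame = luma + luma * CHROMA_PER_4_LUMA[chroma] // 4
    return samples_per_frame * bits_per_sample * fps


if __name__ == "__main__":
    pal = raw_bitrate(720, 576, 25)    # PAL active picture, 25 frames/s
    qcif = raw_bitrate(176, 144, 25)   # QCIF at 25 frames/s
    print(f"PAL:  {pal / 1e6:.1f} Mbit/s")
    print(f"QCIF: {qcif / 1e6:.2f} Mbit/s")
```

Under these assumptions raw PAL video needs about 124.4 Mbit/s, or roughly 15.6 MB for every second of footage, which is why compression is indispensable before storage or transmission.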
Using a high-performance digital signal processor (DSP) to implement a real-time H.264 encoder is a fast and effective approach: it helps the H.264 standard spread quickly into applications and reflects a current research direction in video compression. Digital signal processing (DSP) is an emerging field that draws on many disciplines and is widely used in many areas. It emerged in the 1960s with the rapid development of computer and information technology and has grown quickly since. Digital signal processing uses mathematical techniques to transform real-world signals, represented as digital sequences, or to extract information from them, and over the past two decades it has been widely applied in communications and other fields. A DSP (digital signal processor) is a specialized microprocessor designed to process large amounts of information in digital form. In a typical DSP system, an analog input signal is converted into a digital signal of 0s and 1s; the processor then modifies, removes, or enhances parts of that digital signal, and the results are converted back into analog form or passed on to other chips in the format they require.

1 Key technologies of H.264 encoding

1.1 Motion estimation and compensation with flexible macroblock (MB) partitioning, and improved transform coding

According to the coding characteristics of each macroblock, H.264 combines a DC transform of luma blocks, a DC transform of chroma blocks, and the ordinary residual transform. For the residual, H.264 uses an integer transform based on 4 × 4 blocks, and the block size used in motion estimation can be selected flexibly; other standards process pixel blocks of 16 × 16 or 8 × 8.
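The 4 × 4 integer transform mentioned above can be sketched as the core forward transform Y = C_f X C_f^T with the H.264 matrix C_f. In the standard, the normalization that makes this approximate a DCT is folded into quantization, so it is omitted here, as are the luma and chroma DC transforms. The function names are illustrative, not taken from the source.

```python
# Core forward transform matrix of the H.264 4x4 integer transform.
CF = [
    [1, 1, 1, 1],
    [2, 1, -1, -2],
    [1, -1, -1, 1],
    [1, -2, 2, -1],
]


def matmul(a, b):
    """Multiply two 4x4 integer matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transpose(m):
    """Return the transpose of a 4x4 matrix."""
    return [list(row) for row in zip(*m)]


def forward_4x4(block):
    """Y = CF * X * CF^T using only integer arithmetic.

    Scaling is left to the quantizer (not shown), as in H.264.
    """
    return matmul(matmul(CF, block), transpose(CF))


if __name__ == "__main__":
    flat = [[1] * 4 for _ in range(4)]
    for row in forward_4x4(flat):
        print(row)
```

A flat residual block puts all of its energy into the single DC coefficient (16 for a block of ones). Because the matrix entries are only ±1 and ±2, a production implementation needs nothing but additions, subtractions, and shifts, which is what makes this transform cheap on a DSP.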
H.264 adapts the block size to different application environments and requirements. A 16 × 16 macroblock can be partitioned in four modes (16 × 16, 16 × 8, 8 × 16, and 8 × 8); in 8 × 8 mode, each sub-macroblock can be further divided in three more ways (8 × 4, 4 × 8, and 4 × 4), as shown in Figure 1. Choosing among blocks of different sizes as needed, together with the small integer transform, makes the segmentation of moving objects more accurate and reduces the prediction error along the edges of moving objects. Where fine motion detail matters, introducing smaller motion compensation blocks improves prediction quality in both general and special cases and improves subjective visual quality, while the smaller transform also reduces the amount of computation. Experiments show that using 7 block sizes and shapes improves the compression ratio by more than 15% compared with encoding that uses only 16 × 16 blocks.

1.2 Motion estimation with 1/4-pixel or 1/8-pixel accuracy

Motion estimation and compensation is the most critical part of current video compression technology: it determines encoding speed, quality, and bit rate, and it is the most computationally complex part of the whole encoder. In H.264, the prediction at 1/2-pixel positions is interpolated with a six-tap FIR filter. An FIR (finite impulse response) filter is a filter whose unit impulse response has finite length, and it is one of the most basic components of a digital signal processing system. It can have an exactly linear phase response while realizing an arbitrary magnitude response, and because its unit impulse response is finite, the filter is always stable. FIR filters are therefore widely used in communications, image processing, and pattern recognition.
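The half-pixel interpolation described above, followed by the quarter-pixel averaging that H.264 applies afterward, can be sketched in one dimension. The taps (1, -5, 20, 20, -5, 1)/32 with rounding and 8-bit clipping are those of H.264 luma interpolation; the function names are hypothetical, and a full implementation also filters vertically and pads frame borders.

```python
# Six-tap FIR used for H.264 luma half-sample interpolation.
TAPS = (1, -5, 20, 20, -5, 1)


def clip1(v):
    """Clip to the 8-bit sample range."""
    return max(0, min(255, v))


def half_pel(row, i):
    """Half-sample value between row[i] and row[i + 1].

    Uses the six integer samples row[i-2] .. row[i+3]; border padding is
    not handled, so those samples must exist.
    """
    if i < 2 or i + 3 >= len(row):
        raise IndexError("need row[i-2] .. row[i+3]")
    acc = sum(t * row[i - 2 + k] for k, t in enumerate(TAPS))
    return clip1((acc + 16) >> 5)  # divide by 32 with rounding


def quarter_pel(row, i):
    """Quarter-sample value between row[i] and the half-sample to its right,
    formed by averaging the two with upward rounding."""
    return (row[i] + half_pel(row, i) + 1) >> 1


if __name__ == "__main__":
    ramp = [0, 10, 20, 30, 40, 50, 60, 70]
    print(half_pel(ramp, 3), quarter_pel(ramp, 3))
```

The negative taps sharpen the interpolated value, so near strong edges the raw sum can overshoot the 0 to 255 range; that is why the result is clipped.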
Once the 1/2-pixel values are available, each 1/4-pixel value is obtained by averaging the neighboring integer-pixel and 1/2-pixel values. At high bit rates, motion estimation with 1/8-pixel accuracy is provided. Higher-precision motion estimation further reduces the inter-frame prediction error, reduces the number of nonzero coefficients after transform and quantization, and improves coding efficiency. Using 1/4-pixel accuracy improves coding efficiency by 20% compared with the original integer-pixel prediction.

1.3 Multi-reference frame prediction

Reference frames are the basis of inter-frame predictive coding, that is, motion compensation. Depending on their position relative to the frame being predicted, they are classified as forward or backward reference frames. In earlier codecs, a P picture could be predicted only from the single preceding I or P picture, and a B picture only from the nearest preceding and following I or P pictures. H.264 removes these restrictions: each macroblock can be predicted from a reference frame chosen among several frames preceding the current frame, and when multiple-reference-frame mode is selected, the encoder picks the best reference frame from among them. The term "encoder" also has a broader meaning: a device that converts signals (such as bit streams) or data into a form that can be used for communication, transmission, and storage. In position sensing, an encoder converts angular or linear displacement into an electrical signal; the former is called a code disk and the latter a code ruler.
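The reference-frame choice described above can be sketched as a full search that tries every candidate reference frame and every integer motion vector in a small window, keeping the candidate with the lowest sum of absolute differences (SAD). SAD as the cost, integer-pixel search, the window size, and the names `sad` and `best_reference` are all assumptions for illustration; practical encoders use faster searches and rate-distortion costs that also count motion-vector and reference-index bits.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between the size x size block of `cur`
    at (bx, by) and the block of `ref` displaced by motion vector (dx, dy)."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total


def best_reference(cur, refs, bx, by, size=4, search=2):
    """Full search over every frame in `refs` (most recent first) and every
    integer motion vector within +/- `search` pixels.

    Returns (ref_idx, (dx, dy), cost) for the lowest-SAD candidate.
    """
    h, w = len(cur), len(cur[0])
    best = None
    for ref_idx, ref in enumerate(refs):
        for dy in range(-search, search + 1):
            for dx in range(-search, search + 1):
                # Skip candidates that would read outside the reference frame.
                if not (0 <= bx + dx and bx + dx + size <= w
                        and 0 <= by + dy and by + dy + size <= h):
                    continue
                cost = sad(cur, ref, bx, by, dx, dy, size)
                if best is None or cost < best[2]:
                    best = (ref_idx, (dx, dy), cost)
    return best


if __name__ == "__main__":
    cur = [[10 * y + x + 1 for x in range(8)] for y in range(8)]
    # Same content as `cur`, displaced horizontally by one pixel.
    shifted = [[10 * y + x for x in range(8)] for y in range(8)]
    older = [[0] * 8 for _ in range(8)]  # unrelated content
    print(best_reference(cur, [shifted, older], bx=2, by=2))
```

Here the search finds an exact match in reference 0 with motion vector (1, 0); with a single reference frame the encoder would be stuck with whatever that one frame offers.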
By reading method, such encoders are either contact or non-contact types. A contact encoder uses brush output: a brush touching a conductive or insulating area indicates whether a code bit is "1" or "0". A non-contact encoder uses a photosensitive or magnetically sensitive element; in the optical case, transparent and opaque areas indicate "1" or "0". Through this binary "1"/"0" coding, the sensed physical quantity is converted into machine-readable electrical signals for communication, transmission, and storage.

Returning to H.264: to obtain the best prediction, the reference frame can even be a picture that was itself coded with bidirectional prediction, which greatly reduces the prediction error. Multi-reference frame prediction also gives better results for periodic motion and background switching.

1.4 Adaptive deblocking filter

Block-based transform coding ignores the continuity of object edges, so blocking artifacts appear easily at low bit rates. To remove the blocking introduced by prediction and transform, H.264 applies an adaptive deblocking filter that smooths macroblock edges and effectively improves subjective image quality. Unlike earlier standards, H.264 places the deblocking filter inside the motion-compensated prediction loop: deblocked pictures are used to predict other pictures, so motion estimation works on filtered macroblocks, which yields smaller frame differences and further improves prediction accuracy.

1.5 Enhanced entropy coding

Earlier standards used variable-length (Huffman) entropy coding with a single, fixed code table.
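The core decision of the adaptive deblocking filter can be sketched for one line of samples across a block edge, with p1, p0 on one side and q0, q1 on the other. The edge is filtered only when the step across it is small enough to be a coding artifact rather than a real object edge. Here the thresholds alpha and beta and the clipping limit tc are passed in as plain numbers, whereas the standard derives them from tables indexed by the quantization parameter and boundary strength. Only the normal-strength (bS < 4) update of p0 and q0 is shown; the strong filter and the optional p1/q1 updates are omitted, and the function names are hypothetical.

```python
def clip3(lo, hi, v):
    """Clamp v to the closed range [lo, hi]."""
    return max(lo, min(hi, v))


def filter_edge(p1, p0, q0, q1, alpha, beta, tc):
    """Normal-strength H.264-style luma edge filter for one line of samples.

    Returns the new (p0, q0). Samples are 8-bit.
    """
    # A large step across the edge (or within either side) is treated as
    # genuine image detail and left untouched.
    if abs(p0 - q0) >= alpha or abs(p1 - p0) >= beta or abs(q1 - q0) >= beta:
        return p0, q0
    # Correction toward the edge midpoint, limited to +/- tc.
    delta = clip3(-tc, tc, ((q0 - p0) * 4 + (p1 - q1) + 4) >> 3)
    return clip3(0, 255, p0 + delta), clip3(0, 255, q0 - delta)


if __name__ == "__main__":
    print(filter_edge(60, 60, 70, 70, alpha=15, beta=4, tc=2))    # small step: smoothed
    print(filter_edge(60, 60, 100, 100, alpha=15, beta=4, tc=2))  # real edge: kept
```

The small 60 | 70 step is softened to 62 | 68, while the 60 | 100 step exceeds alpha and is preserved as a genuine edge.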
That table could not adapt to changing video content, which limited coding efficiency. H.264 adapts to the video content, assigning shorter codewords to frequently occurring symbols and thereby removing further redundancy from the bitstream. It provides two entropy coding methods: context-adaptive binary arithmetic coding (CABAC) and context-adaptive variable-length coding (CAVLC). Compared with CAVLC, CABAC achieves higher coding efficiency but is more complex: at the same image quality, CABAC reduces the bit rate of TV signals by about 10% (10% to 15%), while CAVLC is more robust to bit errors.

2 DSP platform implementation of the H.264 video codec

Digital image processing requires a large amount of signal processing, especially for a new-generation video compression standard such as H.264. For the Baseline profile alone, H.264 decoding is about twice as complex as H.263 decoding under the same conditions, and encoding is about three times as complex. Meeting this computational load depends heavily on high-speed DSP technology, and advances in semiconductor manufacturing also allow DSPs with lower power consumption. TI's DM64x series chips offer very high clock frequencies, strong parallel processing, and rich signal processing functions, making them an ideal platform for H.264 encoding and decoding. TI's DM642 is a DSP dedicated to multimedia applications: its clock frequency reaches 600 MHz, it has 8 parallel functional units, and its processing capacity is 4800 MIPS. It is based on the C64x core and adds many peripherals and interfaces. The DM642 is thus a powerful multimedia processor and a good platform for building a multimedia communication system.
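The principle of giving frequent symbols shorter codewords can be illustrated with the unsigned Exp-Golomb code ue(v), which H.264 uses for many header syntax elements. It is a fixed, non-adaptive code, so this sketch is not CAVLC or CABAC themselves, only a minimal illustration of variable-length coding in which small (frequent) values get short codewords. The function names are hypothetical.

```python
def ue(k):
    """Unsigned Exp-Golomb codeword for code number k >= 0, as a bit string."""
    if k < 0:
        raise ValueError("k must be non-negative")
    info = bin(k + 1)[2:]                 # binary of k+1; its leading bit is 1
    return "0" * (len(info) - 1) + info   # M leading zeros, then k+1 in M+1 bits


def decode_ue(bits):
    """Decode one ue(v) codeword at the start of `bits`.

    Returns (k, number_of_bits_consumed).
    """
    m = 0
    while bits[m] == "0":                 # count leading zeros
        m += 1
    k = int(bits[m:2 * m + 1], 2) - 1
    return k, 2 * m + 1


if __name__ == "__main__":
    for k in range(5):
        print(k, ue(k))
```

Code number 0 costs one bit, 1 and 2 cost three bits, and 3 to 6 cost five bits, so mapping the most frequent values to the smallest code numbers keeps the average codeword short.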
The system captures analog video (PAL format), compresses it, and sends the compressed data to the receiving end over a spread-spectrum link; at the receiving end, the DSP decompresses the received bitstream and then handles display, storage, and so on. The overall design must therefore include video input/output, network, and other interfaces. The design is shown in Figure 2. At the sending end, the analog video is converted to a digital video signal by the video A/D chip and fed into video port 2 of the DM642. The DM642 captures the image, stores the image data in SDRAM, compresses the video in real time, and sends the compressed data through its McBSP to the channel coding section, which completes the work of the sending end. At the receiving end, the DM642 receives the compressed image data from the channel decoding section, decompresses it in real time, and writes the decompressed data to SDRAM; the decompressed image data is then sent to video port 0, which passes it to the video D/A for real-time display. The audio/video interface in Figure 2 is reserved for expansion. The 10/100 Mb/s Ethernet and USB controller peripherals mainly make it convenient for the receiver to pass digital video directly to a computer or other terminal. The power supply and reset circuits provide power and reset for the board.

3 DSP optimization of the H.264 video codec

The H.264 encoder is ported to the DM642 image processing platform.
Because the H.264 code needs changes both in its overall code structure and in its core algorithms, the encoding speed of the ported system is initially very unsatisfactory and cannot meet real-time requirements, so the system must be optimized in every respect to reduce coding time. First, redundant code is removed from the encoder; the optimization work then proceeds in three steps. In the first step, the H.264 algorithm is implemented and optimized on a PC. In the second, the PC code is ported to the DSP ("DSP-ized"), so that the decoding algorithm runs on the DSP. The algorithm implemented this way is still very inefficient, however, because all the code is written in C and does not fully exploit the DSP's capabilities. In the third step, therefore, the code is further optimized for the characteristics of the DSP itself so that the H.264 video decoder can process video in real time; this is H.264 DSP algorithm optimization. DSP code optimization is carried out at three levels: project-level optimization, C-level optimization, and assembly-level optimization.

4 Conclusion

In this environment, the decoder achieves a decoding speed of 45 to 60 frames/s on the QCIF test sequences, meeting the goal of real-time decoding. Test results show good subjective image quality with no obvious blocking artifacts at a relatively low bit rate. Real-time coding performance also depends to some extent on the image content and the intensity of motion. The H.264 video codec implemented on the DM642 board is powerful and flexible to use, and has broad application prospects.
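The real-time requirement can be made concrete with a cycle budget: dividing the DSP clock by the number of macroblocks processed per second gives the average number of cycles available for each 16 × 16 macroblock. This sketch assumes the 600 MHz clock quoted for the DM642 and that the codec has the whole DSP to itself; it also computes the peak instruction rate as clock × 8 parallel units, which reproduces the 4800 MIPS figure. The function names are hypothetical.

```python
def macroblocks_per_frame(width, height):
    """Number of 16x16 macroblocks in a frame (dimensions assumed multiples of 16)."""
    return (width // 16) * (height // 16)


def cycles_per_macroblock(clock_hz, fps, width, height):
    """Average clock cycles available per macroblock to sustain `fps` frames/s."""
    return clock_hz / (fps * macroblocks_per_frame(width, height))


def peak_mips(clock_mhz, parallel_units):
    """Peak instruction rate (MIPS) if every functional unit issues each cycle."""
    return clock_mhz * parallel_units


if __name__ == "__main__":
    print(peak_mips(600, 8))
    for fps in (25, 45, 60):
        budget = cycles_per_macroblock(600e6, fps, 176, 144)
        print(f"QCIF at {fps} frames/s: about {budget:,.0f} cycles per macroblock")
```

At the 45 to 60 frames/s reported for QCIF (99 macroblocks per frame), the decoder averages roughly 100,000 to 135,000 cycles per macroblock, which suggests why C-level and assembly-level optimization that keeps all eight functional units busy matters.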
The key to the RADVISION strategy is supporting H.264 SVC in its SCOPIA architecture, so that RADVISION's SVC-enabled SCOPIA desktop system can interoperate with endpoints using other standard video codecs such as H.263 and H.264. Different devices can join the same conference room, and each device receives the best video quality its capabilities allow; devices that do not support H.264 SVC can still connect via H.263 or H.264. It is expected that in the near future, video phones, video conferencing, cable TV, wireless streaming media, and other products based on the H.264 algorithm and DSP processors will gradually reach ordinary households, and video codecs on embedded processing terminals will become the mainstream of applications.