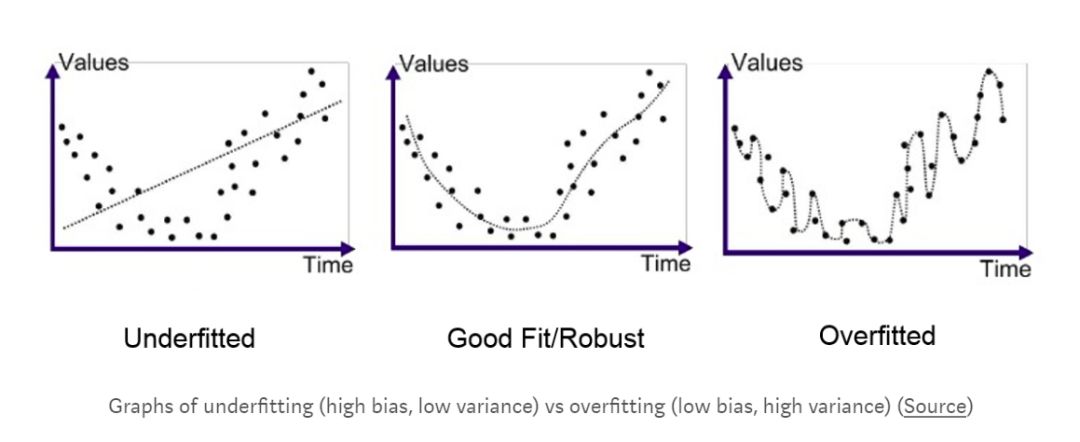

Before we formalize these two concepts, let's look at a story: Suppose you want to learn English but have no knowledge of English before, but I have heard that Shakespeare is a great British writer. If you want to learn English, of course, you will be in a library, recite his related works, and learn English with his works. After one year of study, you walked out of the library and came to New York, and greeted the first person you saw: "Hey, let the light be with you!" The man looked at him with strange eyes. You mutter "neuropathy" in your mouth. You tried it calmly and again: "Dear lady, how elegant is today?" You have once again failed, and scared the man away. When you fail three attempts, you are upset and said, "Oh, what a regret, how sad!" It is a pity, because you have committed one of the most fundamental mistakes in modeling: Over-fitting of the training set. In the science of data science, the overfit model is interpreted as a high variance and a low bias from the training set, resulting in a low generalization in the test data ( Generalization) model. To better understand this complex definition, we try to understand it as a process of trying to learn English. The model we are going to build represents how to communicate in English. Take all the works of Shakespeare as training data and use the dialogue in New York as a testing set. If we measure the performance of this model by the degree of social recognition, then the facts show that our model will not be effectively extended to the test set. But what are the variances and deviations in the model? Variance can be understood as the change produced by the model in response to the training set. If we simply memorize the training set, our model will have a high variance: it is highly dependent on the training set data. If all the works we read come from JK Rowling instead of Shakespeare, the model will be completely different. When such a model with high variance is applied to a new test set, the model will not perform well. Because the model will be lost in the absence of training set data. It is like a student who simply reviews the questions listed in the textbook, but it does not help him solve some practical problems. Bias, as a concept relative to variance, represents the strength (validity) of our assumptions based on data. In the previous example of our attempt to learn English, we based on an uninitialized model and used the author's work as a textbook for learning the language. Low deviations seem to be a positive thing, because we might have the idea that we don't need to look at our data with a biased mindset. However, we need to be skeptical about the integrity of the data. Because any natural processing process generates noise, and we can't confidently ensure that our training data covers all of this noise. So we need to understand before we start learning English. We can't master English by memorizing Shakespeare's famous books. In general, the bias is related to the degree to which the data is ignored, and the variance is related to the degree of dependence of the model and the data. In all modeling processes, there is always a trade-off between deviation and variance, and we need to find an optimal balance point for the actual situation. The concepts of bias and variance can be applied to any model algorithm from simple to complex, which is critical for data scientists. We have just learned that over-fitting models have high variance and low deviation. Then the opposite situation: What is the model of a low variance and high deviation? This is called under-fitting. Compared to previous models that closely fit the training data, an under-fitting model ignores the information obtained from the training data, making it impossible to find the intrinsic link between the input and output data. Let's explain it with an example of trying to learn English. This time we tried to make some assumptions about the model we used before, and we changed to use the complete book of Friends as the training data for learning English this time. In order to avoid the mistakes we made before, this time we make assumptions in advance: only those sentences that start with the most commonly used words --the, be, to, of, and a — are important. When learning, we don't think about other sentences, and we believe that this can build a more efficient model. After a long training, we once again stood on the streets of New York. This time, our performance was relatively good, but others still could not understand us. In the end, we ended up failing. Although we have learned some English knowledge and are able to organize a limited number of sentences, we cannot learn the basics and grammar of English because of the high deviations from the training data. Although this model is not affected by the high variance, it is too overkill and not fit enough compared to previous attempts! Excessive attention to the data can lead to overfitting, and neglect of the data can lead to under-fitting, so what should we do? There must be a way to find the best balance! Fortunately, in data science, there is a good solution called "Validation." In the example above, we only used one training set and one test set. This means that we can't know the quality of our model before actual combat. Ideally, we can use a simulation test set to evaluate the model and improve the model before the actual test. This simulation test set is called the validation set and is a very critical part of the model development work. After two failed English studies, we learned to be smart, this time we decided to use a test set. We use Shakespeare's work and Friends this time, because we have learned from past experience that more data can always improve this model. The difference is that after the training, we don't go straight to the street. We first find a group of friends, meet with them every week, and evaluate our model in the form of talking with them in English. In the first week of the beginning, because our English is still very poor, it is difficult for us to integrate into the dialogue. However, all of this is simply being modeled as a validation set, and once we realize the error, we can adjust our model. Finally, when we are able to adapt and control the dialogue with our friends, we believe that it is time to prepare for the test set. So, we once again boldly went out, this time we succeeded! We are very comfortable with talking to others in real situations, thanks to a very critical factor: the validation set, which improves and optimizes our model. English learning is just a relatively simple example. In many real-world data science models, given the possibility of overfitting on a validation set, a very large number of validation sets are often used! Such a solution is called corss-validation, which requires us to split the training set into multiple different subsets or use multiple validation sets with enough data. The concept of cross-validation covers all aspects of the problem. Now when you come across a problem related to over-fitting vs. under-fitting, bias vs. variance, you will come up with a conceptual framework that will help you understand And solve this problem! Data science seems complicated, but it is actually built through a series of basic modules. Some of these concepts have already been mentioned in this article, they are: Overfitting: over-reliance on training data Under-fitting: unable to obtain the existence relationship in the training data High variance: A model produces dramatic changes based on training data High deviation: a model hypothesis that ignores training data Over-fitting and under-fitting result in low generalization of the test set Correcting the model with a validation set avoids under-fitting and overfitting caused by actual processes Data science and other areas of technology are in fact related to our daily lives. With the help of some examples related to reality, we can explain and understand these concepts very well. Once we understand a framework, we can use technology to handle all the details to solve the problem. Guangzhou Yunge Tianhong Electronic Technology Co., Ltd , https://www.e-cigarettesfactory.com