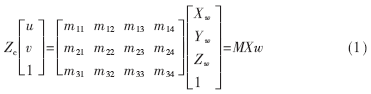

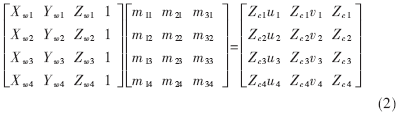

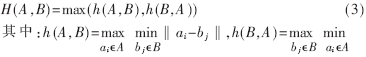

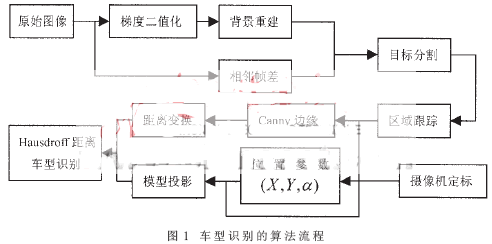

In modern traffic management and road planning, traffic flow and the type and speed of passing vehicles are important parameters. The methods for automatically obtaining these data can be roughly divided into two categories: one is to obtain the parameters of the vehicle itself by using sensors such as piezoelectric, infrared, and toroidal magnetic induction coils. The tracking rate of such methods is high, but it is easy to damage and the installation is not convenient. There is also a method based on image processing and pattern recognition that overcomes the limitations of the previous method. Due to the advancement of image processing and recognition technology and the significant improvement in hardware cost performance, systems with certain practical value have emerged. The use of these systems proves that the image processing method for identifying the vehicle model is becoming more and more mature, the environment adaptability is strong, and the work can be stable for a long time, but the calculation amount is large, and the recognition correct rate is not as high as the induction coil and the laser card reading method. The research in this paper belongs to the latter. It monitors the road surface with a single static camera installed at a high place, and uses the method of motion segmentation and model matching to detect and count the traffic information of multiple lanes. This article refers to the address: http:// The entire identification process is divided into three steps: segmentation, tracking and vehicle type determination. The segmentation of the moving target often uses the frame difference method. In the monitoring occasion, the camera is mostly fixed, the background is basically unchanged or the change is slow, the background image can be gradually taken out from the image sequence, and then the target area is detected by the frame difference method, and the stationary target can also be detected. Since the binary edge image is utilized in the recognition process, the input image is subjected to gradient binarization processing in image segmentation. The determination of the mapping relationship between the three-dimensional space and the two-dimensional image plane is calculated by camera calibration based on the pinhole model. For the tracking of the target area, the matching method of the region feature vector is adopted to reduce the amount of calculation. Since the image processing method is difficult to extract the parameters of the vehicle itself such as the number of wheels and the wheelbase, a method of matching the image on the image and matching the edge of the vehicle is generally employed in the image model recognition. 1 Background reconstruction and image segmentation Since the camera is fixed and the background changes slowly, the background image can be gradually recovered using the image sequence. The basic principle is: monitor each pixel. If the gray level does not change significantly in a long time, the pixel is considered to belong to the background area, and the gray value of the pixel is copied to the background buffer, otherwise it belongs to the foreground. Area [1]. Due to the influence of illumination and vehicle shadows, the background image recovered by this method has a large noise. Therefore, the original input image was subjected to gradient binarization in the experiment, and then the background reconstruction was performed. This can reduce shadow interference and speed up background reconstruction. Since the recognition utilizes edge information, the gradient has no effect on the subsequent recognition process. After the background boundary image is obtained, the target of interest can be segmented by the frame difference method. However, if the target area and the background boundary coincide (the value is "1"), the target area is erroneously determined as the background area (the value is "0") after the subtraction. In order to reduce the error decision area, this paper refers to the binarized frame difference fdmask of two adjacent frames in the segmentation. The decision criterion is as follows: if a pixel in the fdmask is "0", the input image and the corresponding pixel of the background image are subtracted; Otherwise copy the corresponding pixel value in the input image directly. The segmentation result is separated by noise elimination, morphological smoothing boundary, seed filling, region labeling and other subsequent processing. 2 camera calibration In model matching, it is necessary to recover the target three-dimensional information from the two-dimensional image, and simultaneously project the three-dimensional model onto the image plane, so the projection relationship matrix of the three-dimensional space to the image plane must be calculated. This process is the camera calibration. In this paper, the camera calibration method based on pinhole model is adopted. The basic principle is to solve the linear equations and calculate the elements in the perspective projection matrix by using the point coordinates of a given set of three-dimensional worlds and the coordinates of these points in the image. 2]. The perspective projection matrix is ​​as follows: Where: (u, v) is the image coordinate, (Xw, Yw, Zw) is the three-dimensional coordinate, M is the projection matrix, and Zc is the projection distance of the vector from the point to the camera lens in the three-dimensional space on the main optical axis. It is required to solve the various elements of M. According to the literature [2], a projection relationship of 6 points is required to form a 12-order equation group. Usually, the equations are not independent, there is no unique solution, and the error of approximate calculation is large. After the deformation on the basis of (1), the 12th order equation is divided into three 4th order equations. Only the projection relationship of 4 points is needed, and the order of the equations is only 4 orders, which can effectively avoid the singular matrix. Find the only solution. From equation (1), we can get: In addition, in addition to the coordinates of the four sets of points, it is necessary to determine the horizontal and vertical tilt angle of the main optical axis of the lens. 3 Vehicle tracking and classification After the area is divided, the area tracking is performed next, and the target chain of the adjacent two frames is used to establish a target chain in the image sequence, and the whole process of tracking the target from the monitoring range to the departure monitoring range is tracked. The first step is to determine the matching criteria. Common image matching methods are Hausdorff distance matching and image cross-correlation. Both methods require pixel-by-pixel calculations. In order to reduce the amount of calculation, a region feature tracking method is employed. The characteristics of the target area include the area centroid coordinates, the area enclosing rectangle, the area moving speed and the moving direction, and the area area. The matching criterion in this paper adopts two assumptions: the area corresponding to the same target is similar in the adjacent two frames; the centroid of the same target in the previous frame plus the motion velocity obtained by the centroid prediction value and the next frame The regional centroid distance is similar [3]. The tracking process is as follows: (1) The respective regions of the first frame are treated as different targets, and the target chain is started for each target region. (2) According to the decision criterion, if a region in a target chain finds a matching region in the current frame, the region feature in the target chain is updated with the found matching region feature. (3) If the area of ​​the current frame area and the area of ​​the target chain differ greatly at the position where the centroid prediction value is located, it can be considered that a merge or split phenomenon has occurred. The rectangle in the target chain is surrounded by a rectangle. In the present frame, the rectangle is covered by several regions. If there is more than one region, splitting is considered to occur. Start a new target chain for new areas where splitting occurs. Similarly, for the enclosing rectangle of the frame region, the search for the rectangle covers several regions in the target chain. If there is more than one, it is considered that a merge phenomenon occurs, and the new target chain is started by using the merge region, and those merged are terminated at the same time. The target chain of the region. (4) For the region in the target chain, if there is no region matching the frame, the disappearance is considered to have occurred. The target chain does not terminate immediately, and the target chain is terminated only after a few frames have not been found. (5) Find out if there is still a new entry area in this frame, and if it exists, start a new target chain. In this way, the target in the image sequence can be quickly tracked while obtaining the average speed of the vehicle in the surveillance range. When counting, only the target appears in a continuous number of frames to be considered a true target area. Only the target does not appear to appear disappearing after consecutive frames, so the counting errors caused by temporary disappearance can be eliminated. Vehicle classification is a very complicated issue. Image processing methods are difficult to obtain the parameters of the vehicle itself such as the number of wheels and the wheelbase. Therefore, the image recognition model usually adopts the model matching method. Most of the existing research is to extract several straight edges of the vehicle and then match the edges of the model with the lines. Since the calculation of the straight line itself in the image is quite large, this paper does not extract the vehicle edge line, but directly uses the overall result of Canny edge detection to match the model. There is a large deformation between the Canny edge and the edge of the model. The Hausdorff distance matching is not sensitive to the deformation, so it is suitable to use the Hausdorff distance as the matching criterion [4]. There are two sets of finite point sets A={a1,...,ap} and B={b1,...,bq}, then the Hausdorff distance between them is defined as: Where: h(A,B)=max min‖ai-bj‖,h(B,A)=max min aiA bjB bjB aiA ‖bj-ai‖, h(A, B) is called the directed Hausdorff distance from A to B, which reflects the degree of mismatch between A and B. The meaning of h(B, A) is similar to h(A, B). In the specific calculation of the Hausdorff distance, the method of distance transformation is usually adopted. The car classification steps are as follows: (1) On the basis of the segmentation result, the Canny operator edge detection is performed on the target region [5], and only the edge of the segmented target region is processed, and the amount of calculation is reduced. (2) For the Canny edge, the distance transform image is obtained by serial distance transformation. The gray value of each pixel of the distance transformed image is equal to the closest distance of the pixel to the target edge. (3) For each segmentation target, the three-dimensional information of the vehicle is restored, and only the length and the width are calculated. Since a point on the plane of the two-dimensional image corresponds to a series of points of different depths in the camera coordinates, when restoring the information of the point in the world coordinates from a point on the image, first, a component of the point in the world coordinate value is given. Reduce the uncertainty (there is some error in the recovered value, usually giving the Z-direction height value Zw). (4) While calculating the length and width of the target area, the position (X, Y) of the vehicle chassis center on the ground can be obtained, and the angle α of the vehicle on the ground can be determined according to the speed direction. Using the three-dimensional model data of the vehicle itself and (X, Y, α), through the perspective projection of the equation (1), the blanking process, the projection of the vehicle model on the image plane can be determined. (5) After the target enters the specified area, the projected image is used as a template to move the projected image on the distance transformed image. At each position, the pixel value of the distance transformed image covered by the model outline is obtained at each position of the model projection image. And, with this sum value as the degree to which the current model matches the actual vehicle at that location. The minimum value of the matching degree of the current model at each position is taken as the actual matching degree between the current model and the vehicle, and the minimum value is divided by the number of pixels of the model contour, that is, the Hausdorff distance between the model and the vehicle. For each model, the Hausdorff distance between them and the vehicle is obtained separately, and the model corresponding to the minimum value is the model recognition result. In order to reduce the amount of calculation during the experiment, the search method uses a three-step search method. 4 Experimental results The 352×288 video image used in this experiment comes from a traffic scene clip taken by Hangzhou Tianmushan Road with a single fixed CCD camera. The main algorithm is implemented in C language on Trimedia1300 DSP. During the image segmentation process, more gradients, noise reduction, padding and labeling operations are performed. It takes about 0.3s to process one frame on average. The whole process of the algorithm flow is shown in Figure 1. Experiments show that the difference detection between the extracted background and the current frame is more accurate. For lighter shadows, the gradient binarization method can partially eliminate shadow effects. Since only the edge change portion is monitored, the background reconstruction speed is much faster than the direct use of the gray image reconstruction background, and the interference is also small. After the gradient binarization process, the background reconstruction needs only 150-200 frames, and without gradient binarization, there is still no good background after thousands of frames, and the point noise and cloud-like blur are more serious. The results of the tracking count show that using the centroid and area as features, the target in the image sequence can be quickly tracked, and the counting accuracy is up to 95%. The counting error mainly lies in the fact that the splitting and combining process caused by the occlusion does not completely reflect the motion of the target, and the merged area is regarded as the newly appearing area. If the merged region is split again, the split region will be treated as a new region, causing a large count. In order to simplify the tracking algorithm, the experiment only performs tracking matching between two adjacent frames, so the ability to handle splitting and merging is not strong. If tracking is performed between multiple frames, the effect will be better, but the algorithm is more complicated. For vehicles with large differences in size, such as buses and cars, it is easy to separate the information according to the length and width, and there is no need to match the model at the back. Therefore, the test in this paper is mainly aimed at vehicles that are not very different in size on the street, and they are divided into cars, light trucks and vans. The test proves that the Canny edge can be directly used to determine the vehicle type according to the Hausdorff distance matching. Since the edge line is not extracted one by one to match the model contour, the calculation amount is greatly reduced, and the algorithm is simple to implement. Due to the relatively small change in the size of the car, the recognition rate is the highest, up to 90%; the light truck is the second; the recognition rate of the van is the lowest, about 50~60%, and the wrong part is mainly recognized as a car. The main reason is the van. The size varies greatly, and one of the shortcomings of the model matching method is also here. To improve recognition rate, model segmentation is a must. In this test, the camera is installed in front of the street. Since the most prominent shape of the vehicle is the side profile, if the camera is installed next to the street to take a side image of the vehicle, it can be considered that the recognition effect should be better. In addition, the edge effect of the Canny operator is not very good, the noise is relatively large, and it also affects the judgment result. If the Hough transform is used to extract the straight line at the edge of the vehicle, the amount of calculation is large. If the shadows are severe, special shadow removal is also required. These are the problems to be solved in the next step. We are the dial thermometer manufacture and supplier in China, we have dial thermometer, Bimetal Thermometer, Digital Thermometer, solar thermometer, the measuring rang from -40℃ to 600℃, the diameter of thermometer from 30mm to 250mm, it use to heating technology, industrial and process industrial, etc. The material are SS304, SS316. Our bimetal is import from Japan. It`s more accurate and stable. The stem can be customize. Spiral Bimetallic Thermometer,Industrial Bimetal Thermometer,Digital Clinical Thermometer,Handheld Digital Thermometer Changshu Herun Import & Export Co.,Ltd , https://www.herunchina.com