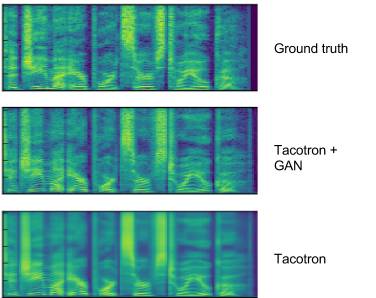

Training a neural network usually relies on a loss function that measures how far the network's output is from the target. For example, the loss function of an image classification network typically penalizes wrong classifications: if the network mistakes a dog for a cat, it incurs a large loss. However, not every problem has an easily defined loss function, especially problems that involve human perception, such as image compression or text-to-speech systems. Generative Adversarial Networks (GANs), a machine learning technique that has improved applications in many areas, including generating images from text, super-resolution, and teaching robots to grasp, offer a solution. However, GANs bring new theoretical and software engineering challenges, and it is difficult to keep up with the pace of GAN research.

The generator improves continuously during training: it produces random noise at the beginning and eventually learns to generate MNIST digits.

To make GANs easier to experiment with, Google developers have open sourced TFGAN, a lightweight library that makes it easier to train and evaluate GANs. TFGAN provides well-tested loss functions and evaluation metrics, along with easy-to-use examples that highlight its expressiveness and flexibility. Google has also released a tutorial that includes a high-level API for quickly getting a model trained on your data.

This figure shows the effect of an adversarial loss on image compression. The top row shows image patches from ImageNet. The middle row shows the result of compressing and decompressing them with a neural network trained on a traditional loss. The bottom row shows the result from a network trained on both a traditional loss and an adversarial loss.
The images produced with the adversarial loss are sharper and more detailed, although they still differ somewhat from the originals.

TFGAN provides simple functions that cover most GAN use cases, so it takes only a few lines of code to get a model running on your data. At the same time, it is built in a modular way to support more unusual GAN designs: developers can pick and use any of the modules for loss, evaluation, and training.

Most neural text-to-speech (TTS) systems produce over-smoothed spectrograms. When a GAN is applied to the Tacotron TTS system, the resulting artifacts can be effectively eliminated.

TFGAN's lightweight design allows it to be used alongside other frameworks or with native TensorFlow code. GAN models written with TFGAN can easily benefit from future improvements, and users can choose from a large number of already-implemented losses and functions without rewriting their models. Finally, TFGAN's code has been thoroughly tested, so users don't have to worry about the numerical or statistical mistakes that are easy to make in GAN code.
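
To make the idea of swappable adversarial losses more concrete, here is a minimal plain-Python sketch. It is not TFGAN's API: every function name below is hypothetical and chosen for illustration only, and the discriminator outputs are assumed to be raw logits held in plain lists. It shows two common loss pairs (the minimax cross-entropy pair and the Wasserstein pair) and a small helper that accepts either pair without any change to the rest of the code.

```python
import math


def _softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def _mean(values):
    return sum(values) / len(values)


def minimax_discriminator_loss(real_logits, fake_logits):
    # Cross-entropy with real samples labeled 1 and generated samples labeled 0.
    # -log(sigmoid(x)) == softplus(-x) and -log(1 - sigmoid(x)) == softplus(x).
    real_term = _mean([_softplus(-x) for x in real_logits])
    fake_term = _mean([_softplus(x) for x in fake_logits])
    return real_term + fake_term


def nonsaturating_generator_loss(fake_logits):
    # The generator is rewarded when the discriminator labels its samples as real.
    return _mean([_softplus(-x) for x in fake_logits])


def wasserstein_discriminator_loss(real_logits, fake_logits):
    # The critic tries to score real samples higher than generated ones.
    return _mean(fake_logits) - _mean(real_logits)


def wasserstein_generator_loss(fake_logits):
    # The generator tries to raise the critic's score on generated samples.
    return -_mean(fake_logits)


def gan_losses(real_logits, fake_logits, generator_loss_fn, discriminator_loss_fn):
    # Hypothetical helper: the loss pair is passed in, so it can be swapped freely.
    g_loss = generator_loss_fn(fake_logits)
    d_loss = discriminator_loss_fn(real_logits, fake_logits)
    return g_loss, d_loss


# Example logits from a discriminator that is fairly confident.
real_logits = [2.0, 1.5]
fake_logits = [-1.0, -2.0]

minimax_pair = gan_losses(real_logits, fake_logits,
                          nonsaturating_generator_loss,
                          minimax_discriminator_loss)
wasserstein_pair = gan_losses(real_logits, fake_logits,
                              wasserstein_generator_loss,
                              wasserstein_discriminator_loss)
```

Switching from one loss pair to the other only changes the two function arguments, which is the kind of modularity the article attributes to TFGAN. In a real model the logits would come from a discriminator network rather than hard-coded lists.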