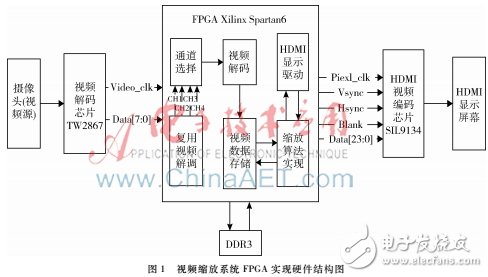

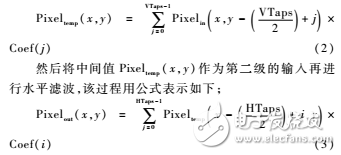

Abstract: Traditional interpolation algorithms preserve detail poorly when scaling video images, especially when the output resolution is high. This work uses a multi-phase interpolation algorithm to implement video image scaling, and mainly describes the principle of the algorithm and its hardware structure. The hardware control circuit is built around a Xilinx Spartan6 series FPGA. The system can take the video signal captured on any one of four camera channels and scale it for display at a resolution of 1920×1080 @ 60 Hz. The results show that the output video is produced in real time and that detail is well preserved.

Video image scaling is also called video image resolution conversion or video image resampling. It is one of the key technologies in digital video image processing and directly affects the output quality and the visual experience. Video image scaling is currently widely used in medical imaging, engineering, multimedia, video conferencing and other fields [1].

Traditional interpolation algorithms for video image scaling include nearest neighbor interpolation, bilinear interpolation, and bicubic interpolation. Other interpolation algorithms include edge interpolation, B-spline interpolation, and adaptive interpolation. Algorithms based on a linear model superimpose high-frequency signals onto the low-frequency signal region during processing, which causes aliasing in the output video image. The effect is most visible at high output resolutions, where the poor handling of detail degrades the visual experience. Multi-phase interpolation is another commonly used video image scaling method. It preserves detail better than the traditional interpolation algorithms and is widely used in industry.
The basic principle of video image scaling is to convert an original image with a resolution of (M, N) into a target image with a resolution of (X, Y). Mathematically, the (M, N) input pixels Pixelin(i, j) (i = 1, 2, ..., M; j = 1, 2, ..., N) are known, where i, j are the pixel coordinates of the original image and Pixelin(i, j) is the original image pixel value. The goal is to find a mapping that uses these known pixels to compute the output pixels Pixelout(x, y) (x = 1, 2, ..., X; y = 1, 2, ..., Y), where x, y are the pixel coordinates of the target image and Pixelout(x, y) is the target image pixel value. The relationship between the input and output pixels can then be expressed as Pixelout(x, y) = f(i, j, Pixelin(i, j)), and the essence of multi-phase interpolation is to solve for the target image pixel values according to this mapping.

It follows that the target image pixel values cannot be read directly from the original image. They must be calculated from the position coordinates and pixel values of the original image. Because image content is locally correlated, an output pixel value is highly correlated with the input pixel values near its corresponding spatial position, and less correlated with pixel values farther away. General video image scaling is a typical two-dimensional filtering process, which can be expressed as follows [4]:

$$\mathrm{Pixelout}(x,y) = \sum_{i=0}^{\mathrm{HTaps}-1}\ \sum_{j=0}^{\mathrm{VTaps}-1} \mathrm{Pixelin}\left(x - \frac{\mathrm{HTaps}}{2} + i,\ y - \frac{\mathrm{VTaps}}{2} + j\right) \times \mathrm{Coef}(i,j) \tag{1}$$

where HTaps and VTaps are the numbers of taps of the two-dimensional filter in the horizontal and vertical directions, and Coef(i, j) is the corresponding filter coefficient, which represents the weight of each participating input pixel value in the output pixel value.
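As an illustration of equation (1), the following is a minimal Python sketch that evaluates the double sum for a single output position. The function name `filter_2d_at`, the 0-based indexing, the integer division used for HTaps/2 and VTaps/2, and the clamping of coordinates at the image border are all assumptions made for this example; the text does not specify how borders are handled.

```python
def filter_2d_at(pixel_in, coef, x, y):
    """Evaluate the double sum of equation (1) at one position (x, y).

    pixel_in : list of rows, indexed pixel_in[row][col] (0-based).
    coef     : coef[i][j], i = horizontal tap index, j = vertical tap index.
    Coordinates outside the image are clamped to the nearest edge pixel
    (an assumption for this sketch, not taken from the text).
    """
    h_taps = len(coef)
    v_taps = len(coef[0])
    height = len(pixel_in)
    width = len(pixel_in[0])

    acc = 0.0
    for i in range(h_taps):
        for j in range(v_taps):
            # Input coordinate x - HTaps/2 + i, y - VTaps/2 + j.
            col = min(max(x - h_taps // 2 + i, 0), width - 1)
            row = min(max(y - v_taps // 2 + j, 0), height - 1)
            acc += pixel_in[row][col] * coef[i][j]
    return acc


# Usage: a 4x4 averaging kernel applied to a flat image leaves it flat.
flat_image = [[10.0] * 8 for _ in range(8)]
box_coef = [[1.0 / 16] * 4 for _ in range(4)]
flat_result = filter_2d_at(flat_image, box_coef, 3, 3)
```

Each output pixel costs HTaps × VTaps multiplications in this form, and the coefficients Coef(i, j) alone decide how the neighboring input pixels are weighted.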
The value of Coef(i, j) determines how strongly each input pixel value affects the output pixel value, and therefore directly determines the scaling quality. The choice of coefficients depends on the low-pass and anti-aliasing requirements of the filter.

A two-dimensional filter structure is computationally complex. To simplify the operation, the two-dimensional filter is generally split into two cascaded one-dimensional filters, a vertical filter and a horizontal filter, which together realize the two-dimensional characteristic. The first stage performs vertical filtering and outputs the intermediate value Pixeltemp(x, y); the second stage then filters these intermediate values horizontally to produce Pixelout(x, y). With this split, the number of multipliers required drops from (VTaps × HTaps) to (VTaps + HTaps), which greatly reduces the amount of computation. This is a clear advantage for hardware systems with strict real-time requirements. The same idea is also the basic model for hardware implementations of various video image scaling algorithms: scaling is performed separately in the horizontal and vertical directions, so the problem reduces to analyzing a sampling rate change of a one-dimensional signal.

The hardware structure of the system is shown in Figure 1. The multi-phase interpolation scaling algorithm is verified on a Xilinx Spartan6 series FPGA. The system uses four analog cameras as the video input source. The TW2867 multi-channel video decoder chip converts the analog video signals into digital video signals and sends them to the FPGA for processing. The FPGA first performs some preprocessing on the input data.

Figure 1. FPGA implementation hardware structure of the video scaling system
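Before continuing with the hardware data path, the cascaded vertical and horizontal filtering described earlier in this section can be illustrated in software. The sketch below is an assumption-laden example, not the FPGA design: it assumes the two-dimensional coefficients factor as Coef(i, j) = coef_h[i] × coef_v[j], it clamps coordinates at the image border, and it processes whole frames in Python lists instead of streaming pixels. The names `vertical_pass`, `horizontal_pass`, `separable_filter` and `multiplier_counts` are hypothetical.

```python
def vertical_pass(image, coef_v):
    """First stage: filter each column with the VTaps vertical coefficients.

    Produces the intermediate image called Pixeltemp in the text.
    """
    height, width = len(image), len(image[0])
    v_taps = len(coef_v)
    temp = []
    for y in range(height):
        row_out = []
        for x in range(width):
            acc = 0.0
            for j in range(v_taps):
                # Clamp at the top/bottom border (assumption).
                row = min(max(y - v_taps // 2 + j, 0), height - 1)
                acc += image[row][x] * coef_v[j]
            row_out.append(acc)
        temp.append(row_out)
    return temp


def horizontal_pass(image, coef_h):
    """Second stage: filter each row with the HTaps horizontal coefficients."""
    height, width = len(image), len(image[0])
    h_taps = len(coef_h)
    out = []
    for y in range(height):
        row_out = []
        for x in range(width):
            acc = 0.0
            for i in range(h_taps):
                # Clamp at the left/right border (assumption).
                col = min(max(x - h_taps // 2 + i, 0), width - 1)
                acc += image[y][col] * coef_h[i]
            row_out.append(acc)
        out.append(row_out)
    return out


def separable_filter(image, coef_h, coef_v):
    """Cascade the two one-dimensional stages: vertical, then horizontal."""
    return horizontal_pass(vertical_pass(image, coef_v), coef_h)


def multiplier_counts(h_taps, v_taps):
    """Multiplications per output pixel: (direct 2-D form, cascaded 1-D form)."""
    return h_taps * v_taps, h_taps + v_taps
```

For a 4-tap by 4-tap filter, `multiplier_counts(4, 4)` gives 16 multiplications per pixel for the direct form against 8 for the cascade, which is the reduction from (VTaps × HTaps) to (VTaps + HTaps) stated above. When the coefficients factor as assumed, the cascade produces the same result as the direct two-dimensional sum.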
Because the frame rate of the captured images does not match the refresh rate of the output display, the image data must first be written into DDR3 and then read back for scaling and output, so that the read and write rates are matched during data processing. The output signal must comply with standard HDMI timing. Finally, the SIL9134 video encoder chip encodes the output data into a video stream that is sent to the display, where the result can be viewed.

2.1 Related chip introduction

2.1.1 Video decoder chip