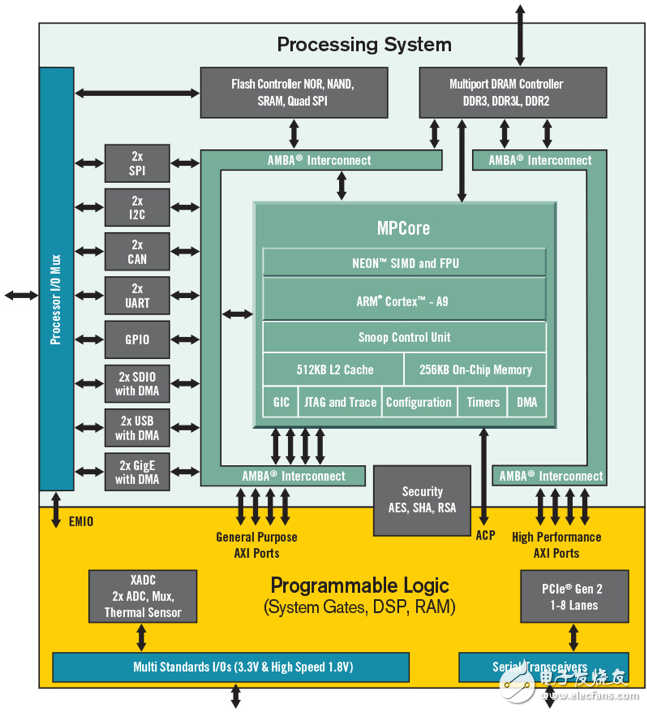

Compared with its earlier self, today's FPGA is no longer just a collection of lookup tables (LUTs) and registers. It has moved far beyond that, into a vehicle for architectural exploration and a design framework for future ASICs. This family of devices now spans everything from basic programmable logic to complex SoCs. In application areas including automotive, AI, enterprise networking, aerospace, defense, and industrial automation, FPGAs let chipmakers implement systems in a way that can be updated when necessary. That flexibility is critical in new markets where protocols, standards, and best practices are still evolving and where ECOs (engineering change orders) are required to stay competitive.

Louie de Luna, director of marketing at Aldec, said this is why Xilinx decided to add Arm cores to its Zynq FPGAs, creating an FPGA SoC. "The most important thing is that the vendor has improved the tool flow. That has generated a lot of interest in Zynq. Their SDSoC development environment looks like C, which is good for developers because applications are usually written in C. So they take software functions and let users assign those functions to hardware."

Some of these FPGAs are not just SoC-like. They are SoCs. "They may contain multiple embedded processors, dedicated compute engines, complex interfaces, large memories, and more," said Muhammad Khan, synthesis verification product specialist at OneSpin Solutions. "System architects plan for and use the available resources of an FPGA just as they do for an ASIC. Design teams use synthesis tools to map their SystemVerilog, VHDL, or SystemC RTL code to basic logic elements. For most of the design process, the difference between targeting an FPGA and targeting an ASIC or full-custom chip is shrinking."

Ty Garibay, CTO of ArterisIP, is very familiar with this evolution. "Historically, Xilinx started down the Zynq path in 2010.
They defined a product that incorporated an Arm SoC as a hard macro in an existing FPGA," he said. "Then Intel (Altera) hired me to do basically the same thing. The value proposition is that an SoC subsystem is something many customers want, but because of the nature of SoCs, and especially processors, they don't synthesize well onto FPGAs. Implementing that function in the actual programmable logic is prohibitive, because it uses almost the entire FPGA. But as a hard function, it can occupy a small part of the overall FPGA die. You give up the ability to truly reconfigure the SoC's logic, but it can be programmed in software, and that is how you change its function."

This means software-programmable functions, hard macros, and hardware-programmable functions can coexist in one device and work together, he said. "There are some pretty good markets for this, especially low-cost automotive control. Traditionally, a mid-performance microcontroller-type device sat next to the FPGA anyway. Customers simply say, 'I'll put that whole function into the hard macro on the FPGA die to save board space, reduce BOM cost, and cut power.'"

This is consistent with how FPGAs have developed over the past 30 years. The original FPGAs were just programmable fabric and a bunch of I/O. Over time, memory controllers were hardened, along with SerDes, RAM, DSP blocks, and HBM controllers. "FPGA vendors have kept increasing die area, but they have also kept adding more and more hard logic that a sizable share of the customer base uses," Garibay said. "What's happening today is an extension of that to the software-programmable side.
Most of what was added before the Arm SoC was hardware of various kinds, mostly I/O-related but also DSP. It made sense to conserve programmable gates by hardening those functions, because they were used widely enough to justify it."

A point of view

This has essentially turned the FPGA into a Swiss Army knife. "If you go back in time, it was just a bunch of LUTs and registers, not even gates," said Anush Mohandass, vice president of marketing and business development at NetSpeed Systems. "They had the classic problem. If you compare any general-purpose task with its application-specific version, general-purpose computing gives you more flexibility, while application-specific computing gives you some performance or efficiency advantage. Xilinx and Intel (Altera) have been working to close that gap more and more. They noticed that almost every FPGA customer had a DSP and some form of compute. So they added Arm cores, they added DSP cores, they added all the different PHYs and other common pieces. They hardened these, which makes them more efficient and improves the performance curve."

These new capabilities have opened the door for FPGAs to play an important role in a variety of emerging and existing markets. "From a market perspective, FPGAs are definitely moving into the SoC market," said Piyush Sancheti, senior director of marketing at Synopsys. "Whether you build an FPGA or a full-blown ASIC is an economic decision. Those lines are starting to blur, and we are certainly seeing more and more companies, especially in certain markets, finding FPGAs economical for production."

Historically, FPGAs were used for prototyping, and their production use was limited to the aerospace, defense, and communications infrastructure markets, Sancheti said. "Now the market is expanding to automotive, industrial automation, and medical devices."
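Sancheti's point that the FPGA-or-ASIC choice comes down to economics can be illustrated with the simplest possible cost model, in which total cost is a one-time NRE charge plus a per-unit price times volume. The Python sketch below finds the volume at which the ASIC becomes the cheaper option. The function names and every dollar figure are hypothetical assumptions for illustration, not numbers from the article or from any vendor.

```python
import math
from typing import Optional


def total_cost(nre: float, unit_cost: float, volume: int) -> float:
    """One-time NRE plus per-unit cost times volume."""
    return nre + unit_cost * volume


def break_even_volume(fpga_nre: float, fpga_unit: float,
                      asic_nre: float, asic_unit: float) -> Optional[int]:
    """Smallest volume at which the ASIC's total cost is <= the FPGA's.

    Returns None when the ASIC's unit cost is not lower, because the ASIC
    then never catches up with the FPGA.
    """
    if asic_unit >= fpga_unit:
        return None
    # Solve asic_nre + asic_unit*v <= fpga_nre + fpga_unit*v for v.
    v = (asic_nre - fpga_nre) / (fpga_unit - asic_unit)
    return max(0, math.ceil(v))


# Hypothetical numbers, for illustration only.
FPGA_NRE, FPGA_UNIT = 500_000, 150.0     # little up-front cost, pricier parts
ASIC_NRE, ASIC_UNIT = 10_000_000, 20.0   # masks and design cost, cheap parts

v = break_even_volume(FPGA_NRE, FPGA_UNIT, ASIC_NRE, ASIC_UNIT)
print(f"ASIC is cheaper from about {v:,} units")  # ASIC is cheaper from about 73,077 units
```

Real decisions also weigh schedule, risk, and the chance that requirements change before volume is reached, none of which this linear model captures.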
AI, a booming FPGA market

"Some of the companies using FPGAs are system vendors and OEMs who want to optimize the performance of their own IP or AI/ML algorithms," said NetSpeed's Mohandass. "They want to develop their own chips, and for many of them, starting an ASIC can be a little scary. They also may not want to spend $30 million in wafer costs to get a chip. For them, FPGAs are an effective entry point. They have unique algorithms and their own neural networks, and they can see whether those deliver the performance they expect."

Stuart Clubb, senior product marketing manager for Catapult HLS synthesis and verification at Mentor, a Siemens business, said the current challenge for AI applications is quantification. "What kind of network do I need? How do I build it? What is the memory architecture? Start with the network: even if you only have a few layers, with a lot of data and many coefficients it quickly turns into millions of coefficients, and the memory bandwidth becomes very scary. Nobody really knows what the right architecture is. If you don't know the answer, you won't jump in and build an ASIC."

In enterprise networking, the most common problem is that encryption standards seem to change constantly. "Instead of trying to build an ASIC, it's better to put it in an FPGA and make the crypto engine better," Mohandass said. "Or, if you do any kind of packet processing across the network, FPGAs still give you more flexibility and more programmability. This is where flexibility comes into play, and they already use it. You can still call it heterogeneous computing, and it still looks like an SoC."

New rules

With next-generation FPGA SoCs, the old rules no longer apply. "Put simply, if you are debugging on the board, you are doing something wrong," Clubb pointed out. "Debugging on the board is considered the lower-cost approach, and it goes back to the early days when you could say, 'It's programmable, I can put a scope on it and see what's going on.' But now the thinking is, 'If I find a bug, I can fix it, spin a new bitstream in a day, put it back on the board, and find the next bug.' That's crazy. You see a lot of that mentality in places where engineers' time isn't counted as a cost. Management won't buy simulators, system-level tools, or debuggers, because 'I'm just paying this guy to get the job done, and I'll yell at him until he does.'"

He said this kind of behavior is still very common, because enough companies keep everyone grounded with 10% annual cuts. However, an FPGA SoC is a real SoC and requires rigorous design and verification methodologies. "The fact that it's programmable doesn't really change design and verification," Clubb said. "If you're making an SoC, yes, you can follow what some of my customers call the 'Lego' approach. It's a block-diagram approach: I need a processor, a memory, a GPU, some other parts, a DMA memory controller, Wi-Fi, USB, and PCI. Those are the 'Lego' blocks you assemble. The trouble is that you have to verify that each of them works and that they work together."

Even so, FPGA SoC developers are quickly catching up with traditional SoC teams in their focus on verification methodology. "They're not as far along as traditional silicon SoC developers, whose thinking is, 'This will cost me $2 million, so I'd better be ready,' because the cost of [using an FPGA] is lower," Clubb said. "But if you spend $2 million developing an FPGA and you get it wrong, you'll now spend three months fixing those bugs, and there are still questions to answer. How big is the team? How much will it cost? What are the penalties? These costs are very difficult to quantify.
If you're in the consumer space, you're unlikely to be thinking about using FPGAs for the Christmas season, so the priorities are different. You don't see as many companies taking on the full cost and risk of doing an SoC in custom silicon, pulling the trigger, and saying, 'This is my system, I'm done.' As we all know, the industry is consolidating, and there are fewer and fewer big players building big chips. Everyone has to find a way to get this done, and these FPGAs are doing that."

New tradeoffs

Sancheti said it is not uncommon for engineering teams to capture their design intent in a way that keeps their choice of target device open. "We've seen many companies create and verify RTL while hardly knowing whether they're going to build an FPGA or an ASIC, because that decision can change many times. You can start with an FPGA, and if you reach a certain volume, the economics may favor an ASIC."

This is especially true in today's AI application space. "The technology for accelerating AI algorithms is evolving," said Mike Gianfagna, vice president of marketing at eSilicon. "AI algorithms have obviously been around for a long time, but we've suddenly become much more sophisticated in how we use them, and the ability to run them at near-real-time speeds is amazing. It started with CPUs and then moved to GPUs. But even a GPU is a programmable device, so it has a certain generality. Its architecture is good at parallel processing, which is what graphics acceleration is all about, and that is also what AI is all about. That works well to a large extent, but it's still a general-purpose approach, so you get a certain level of performance and power. Some people turn to FPGAs next, because you can tailor the circuits more closely than with a GPU, and performance and efficiency improve.
ASICs are the ultimate in power and performance, because you have a completely custom architecture that does exactly what you need, no more, no less. That's obviously the best."

AI algorithms are difficult to map to silicon because they are in a state of almost constant change. So at this point, a fully custom ASIC is not an option, because it would be outdated by the time the chip ships. "FPGAs are very good for this because you can reprogram them, so even if they're expensive, they won't become obsolete and your investment won't be wasted," Gianfagna said. There are also custom memory configurations and certain subsystem functions, such as convolution and transpose memory, that can be reused, so although the algorithm may change, some blocks stay the same and are used over and over.

With this in mind, eSilicon is developing software analysis capabilities to examine AI algorithms. The goal is to select the best architecture for a specific application more quickly.

"An FPGA gives you the flexibility to change the machine or engine, because you may encounter a new network. Committing to an ASIC is very risky in that sense, because you may not have the best support for that network, so you want that flexibility," said Deepak Sabharwal, vice president of IP engineering at eSilicon. "However, FPGAs are always limited in capacity and performance, so they can't really meet production-level specifications, and eventually you will have to go to an ASIC."

Embedded LUTs

Another option that has made progress over the past few years is the embedded FPGA, which brings programmability into the ASIC while bringing ASIC performance and power advantages to the FPGA fabric. "FPGA SoCs are still mostly FPGA, with the SoC portion taking up a relatively small area of the die," said Geoff Tate, CEO of Flex Logix. "In the block diagram the proportions look different, but in an actual die photo it's mostly FPGA.
But there is a class of applications and customers for which the right ratio between FPGA logic and the rest of the SoC is a smaller FPGA, giving them RTL programmability at a more cost-effective die size."

This approach is gaining traction in areas such as aerospace, wireless base stations, telecommunications, networking, automotive, and vision processing, and especially in AI. "The algorithms change so fast that the chips are almost obsolete by the time they come back," Tate said. "An embedded FPGA lets them iterate their algorithms faster." Nijssen said that in this situation, programmability is critical to avoid respinning the entire chip or module.

Designing for debug

As with any SoC, understanding how to debug these systems and building in instrumentation helps catch problems early. "As system FPGAs become more like SoCs, they need the development and debug methodologies that are expected of SoCs," said Rupert Baines, CEO of UltraSoC. "There's a (perhaps naive) belief that because you can see anything in an FPGA, it's easy to debug. That is true at the bit level, in a waveform viewer, but it doesn't hold once you get to the system level. The latest large FPGAs are clearly system-level devices. At that point, the waveform-level view you get from bit-probe-style instrumentation isn't very useful. You need a logic analyzer, a protocol analyzer, and good debug and trace capabilities for the processor cores themselves."

The size and complexity of these FPGAs call for verification processes similar to those used for ASICs. Advanced UVM-based testbenches support simulation, which is often supplemented by emulation. Formal tools also play a key role, from automatic design checks to assertion-based verification. While it's true that FPGAs can be changed faster and more cheaply than ASICs, the difficulty of detecting and diagnosing bugs in a large SoC means the design must be thoroughly verified before it goes into the lab, said OneSpin's Khan.
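Baines's contrast between raw bit probes and a logic analyzer comes down to capture control: an analyzer records continuously, waits for a trigger condition, and keeps a window of history around the event. The Python sketch below models that pre-trigger/post-trigger ring buffer in software. `TraceBuffer`, its depths, and the 0xFF trigger value are hypothetical assumptions for illustration; this does not describe UltraSoC's or any other vendor's debug IP.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class TraceBuffer:
    """Hypothetical software model of a logic-analyzer capture buffer.

    Before the trigger fires, only the most recent `pre_depth` samples are
    kept (a ring buffer). The sample that satisfies `trigger` and the samples
    after it are stored until `post_depth` samples (trigger included) have
    been captured; after that the capture is frozen.
    """

    def __init__(self, pre_depth: int, post_depth: int,
                 trigger: Callable[[int], bool]) -> None:
        self.pre: Deque[int] = deque(maxlen=pre_depth)  # oldest samples fall off
        self.post: List[int] = []
        self.post_depth = post_depth
        self.trigger = trigger
        self.triggered_at: Optional[int] = None  # index of the trigger sample
        self.count = 0  # number of samples accepted so far

    @property
    def done(self) -> bool:
        return self.triggered_at is not None and len(self.post) >= self.post_depth

    def sample(self, value: int) -> None:
        if self.done:
            return  # capture window is full; ignore further samples
        if self.triggered_at is None and self.trigger(value):
            self.triggered_at = self.count
        if self.triggered_at is None:
            self.pre.append(value)   # still waiting: keep rolling history
        else:
            self.post.append(value)  # trigger sample and what follows it
        self.count += 1

    def capture(self) -> List[int]:
        """Pre-trigger history followed by the trigger and post-trigger samples."""
        return list(self.pre) + self.post


# Example: watch an 8-bit status bus for a hypothetical error code 0xFF.
tb = TraceBuffer(pre_depth=4, post_depth=3, trigger=lambda v: v == 0xFF)
for v in [1, 2, 3, 4, 5, 6, 0xFF, 7, 8, 9, 10]:
    tb.sample(v)
print(tb.triggered_at, tb.capture())  # 6 [3, 4, 5, 6, 255, 7, 8]
```

The point of the design is that the interesting context (the four samples before the error) is preserved without storing the whole run, which is what makes system-level capture practical when on-chip memory is limited.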
In fact, there is one area where verification requirements for FPGA SoCs may be higher than for ASICs: equivalence checking between the RTL input and the synthesized netlist. Compared with a traditional ASIC logic synthesis flow, the FPGA elaboration, synthesis, and optimization stages typically make more modifications to the design. These changes can include moving logic across cycle boundaries and implementing registers in memory structures. Khan added that thorough sequential equivalence checking is critical to ensure that the final FPGA design still matches the original designer's intent in the RTL.

On the tools side, there is room to improve performance. "With embedded vision applications, many of which are written for Zynq, you might get 5 frames per second. But if you accelerate in hardware, you might get 25 to 30 frames per second. That paves the way for new devices. The problem is that simulating and verifying these devices isn't simple. You need integration between software and hardware, which is difficult. If you run everything in the SoC, it's too slow. Each simulation can take five to seven hours. If you co-simulate, you can save time," said Aldec's de Luna.

In short, the same methodologies used for complex ASICs are now being applied to complex FPGAs. That becomes increasingly important as these devices are used in functional-safety applications. "This is where formal analysis comes in, to identify fault propagation paths and then verify those paths," said Adam Sherer, director of marketing at Cadence. "These things are very well suited to formal analysis. Traditional FPGA verification approaches make these kinds of verification tasks almost impossible. It's still very common in FPGA design to assume that hardware testing at system speed is fast and easy, so only a simple level of simulation is needed as a sanity check.
Then you program the device, go into the lab, and start running. It's a relatively fast path, but observability and controllability in the lab are extremely limited, because you can only probe data that is routed from inside the FPGA out to the pins where you can see it on the tester."

Dave Kelf, chief marketing officer of Breker Verification Systems, agrees. "This has caused an interesting shift in how these devices are verified. In the past, as much of a small device as possible was verified by loading the design onto the FPGA itself and running it in real time on a test card. With the emergence of SoCs and software-driven design, one might expect this 'prototype the design itself' approach to carry over to software-driven techniques, and it may apply to certain stages of the process. However, identifying and debugging problems during prototyping is very complicated. This early verification stage requires simulation, so the SoC FPGA looks more and more like an ASIC. Given this two-stage process, commonality between the stages, including common debug and testbenches, makes the process more efficient. Portable Stimulus and other new developments will provide that commonality and, in fact, make SoC FPGAs easier to manage."

Conclusion

Looking ahead, Sherer said users are seeking to apply the more rigorous processes currently used in the ASIC world to the FPGA flow. "There is a lot of training and analysis involved, and they want more technology in FPGAs for debug to support that level," he said. "The FPGA community tends to lag behind the state of the art and to use very traditional methods, so they need training and understanding in this space: planning, management, and requirements traceability.
Those elements of the SoC flow are definitely coming into FPGAs. It's not so much the FPGAs themselves driving it, but the industry standards in the end applications. For engineers who have been working in an FPGA environment, this is a readjustment and a re-education."

The line between ASICs and FPGAs is blurring, driven by applications that require flexibility, by system architectures that combine programmability with hard-wired logic, and by tools that now apply to both. This trend is unlikely to change soon, because many of the new application areas that need these combinations are still in their infancy.

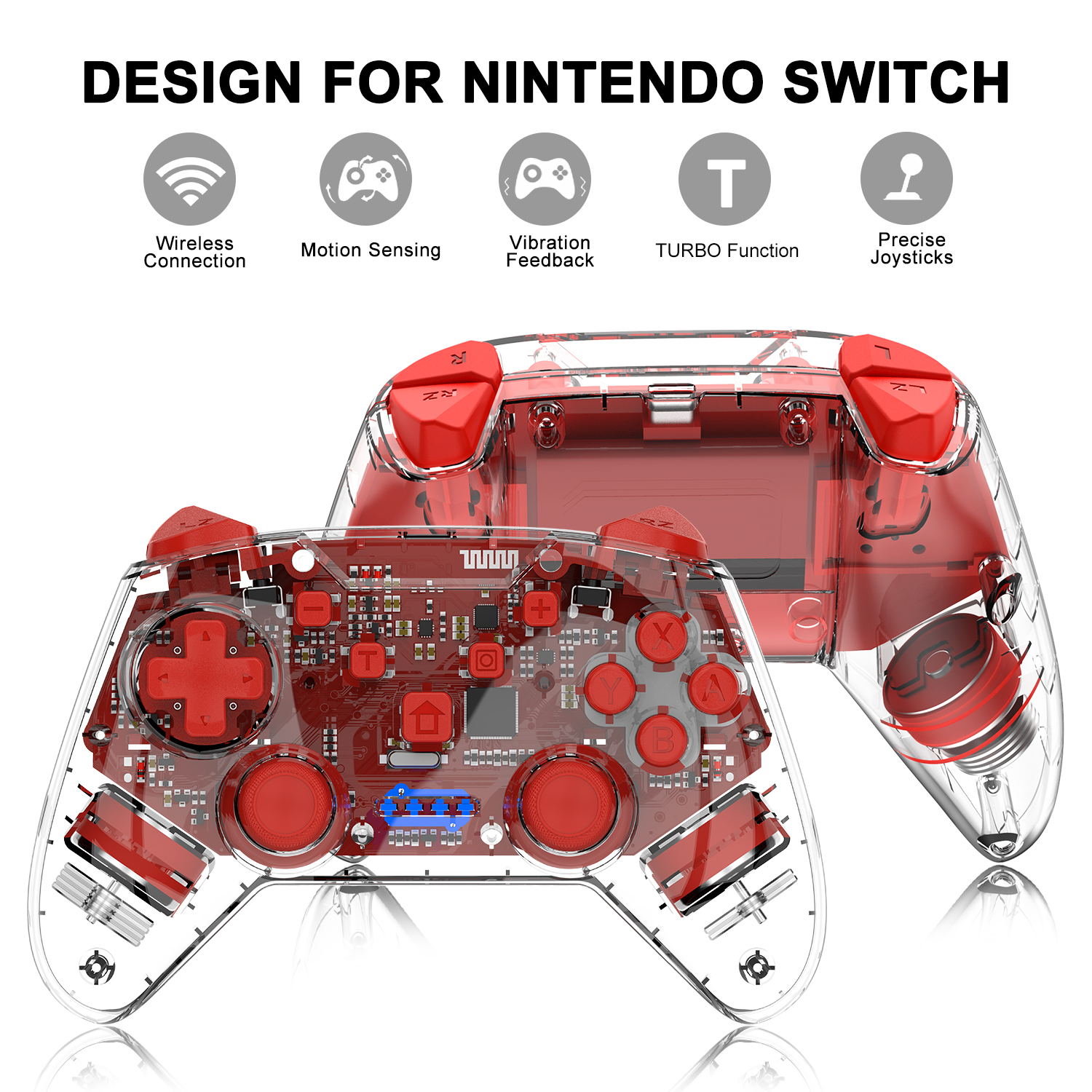


Xilinx's Zynq-7000 SoC. Source: Xilinx